Results of a bit more investigation:

The ClearML example does use the PyTorch dist package but none of the DistributedDataParallel functionality; instead, it reduces gradients “manually”. This script is also not prepared for torchrun, as it launches more processes itself (without using the multiprocessing of Python or PyTorch).

When running a simple example (code attached...

Hi @DeterminedCrab71 and @CostlyOstrich36, coming back to this after a while. It actually seems to be related to Google Cloud permissions:

- The images in the ClearML dashboard do not show, as discussed above

- If I copy the image URL (coming out as something like None) and open it in another tab where I’m logged into my Google Account, the image loads

- If I do t...

Also, as an update: I tried to start the containers one by one and resolve the errors that came up. The only real one I found was that Redis crashed when loading the previous database. Since I figured I wouldn't necessarily need the cache, I cleared the dump file, and then all the services started. This all refers to the old server version.

When trying the same with the most recent docker-compose.yml, the services all started by themselves, but when I start the full docker compose, the das...

I meant maybe me activating offline mode, somehow changes something else in the runtime and that in turn leads to the interruption. Let me try to build a minimal reproducible version 🙂

Although, some correction here: While the secret is indeed hidden in the logs, it is still visible in the “execution” tab of the experiment, see two screenshots below.

Once again, I set them with task.set_base_docker(docker_arguments=["..."])

Yes, totally, but we’ve been having problems getting these GPUs specifically (even manually in the EC2 console and across regions), so I thought maybe it’s easier to get one big one than many small ones. I’ve never actually checked if that is true, though 🙂 Thanks anyhow!

@CostlyOstrich36 thank you, now everything works so far!

Last thing: Is there any way to change all the links in the new ClearML server such that an artifact that was previously under s3://… is now taken from gs://… ? The actual data is already available under the gs:// link, of course.
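
The excerpt here does not show whether the server has a built-in way to relink artifacts, so the following is only a minimal sketch of the prefix mapping being asked about (s3://… to gs://…). The function name `rewrite_storage_prefix` and the example bucket are made up for illustration; how and where the stored links would actually be updated on the server is not covered.

```python
def rewrite_storage_prefix(url, old_prefix="s3://", new_prefix="gs://"):
    """Swap the storage prefix of a URL; URLs with any other prefix are returned unchanged."""
    if url.startswith(old_prefix):
        return new_prefix + url[len(old_prefix):]
    return url


if __name__ == "__main__":
    # Hypothetical example links, not taken from the thread.
    links = [
        "s3://example-bucket/artifacts/model.pt",
        "https://example.com/debug/image.png",
    ]
    for link in links:
        print(link, "->", rewrite_storage_prefix(link))
```

Only the scheme prefix changes; the bucket name and object path are kept as they are, which assumes the data sits under the same path in both stores.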

Happy to and thanks!

New state: After starting with the old YML again, the web app looks new (presumably because the image allegroai/clearml:latest is used), but the server version still lists WebApp: 2.1.0-664 • Server: 1.9.2-317 • API: 2.23.

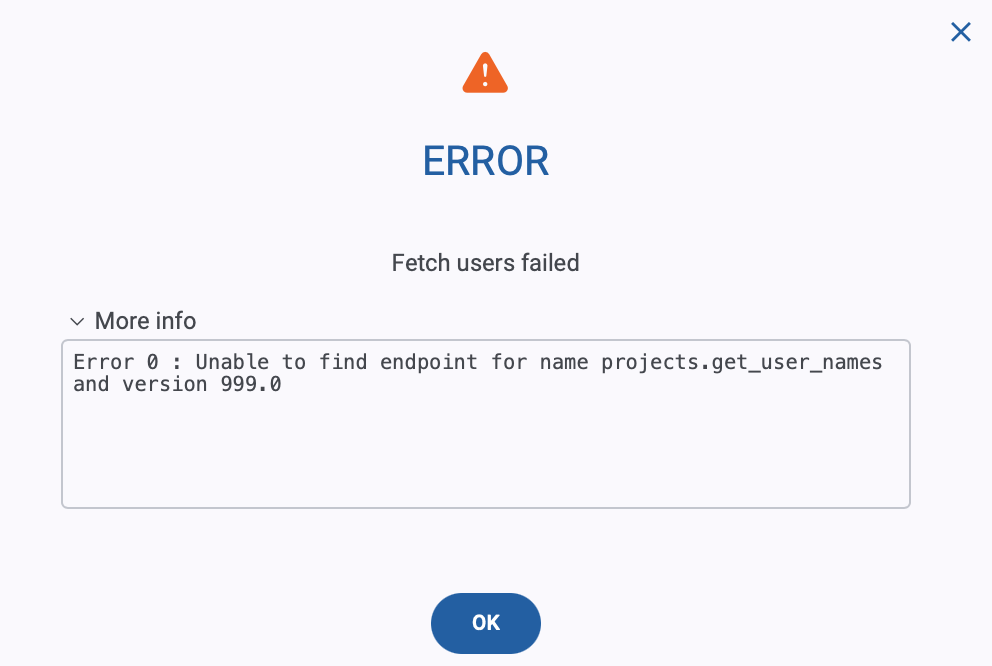

Creating tasks and reporting things works again, but I regularly see the attached UI error. Is there any way to resolve this?

Hey guys, really appreciating the help here!

So what I meant by “it does work” is that the environment variables go through to the container, I can use them there, everything runs.

The remaining problem is that this way, they are visible in the ClearML web UI which is potentially unsafe / bad practice, see screenshot below.

Sorry that these issues go quite deep and chaotic - we would appreciate any help or ideas you can think of!

AgitatedDove14 maybe to come at this from a broader angle:

Is ClearML combined with DataParallel or DistributedDataParallel officially supported / should that work without many adjustments? If so, would it be started via python ... or via torchrun ... ? What about remote runs, how will they support the parallel execution? To go even deeper, what about the machines started via ClearML Autoscaler? Can they either run multiple agents on them and/or start remote distribu...

Yes, when the WebUI prompted me for them. They also seem to work since images in Debug Samples (also in S3) show up after I entered them.

I can also see that the plot is saved in Debug Samples after explicit reporting, even though I don’t set report_interactive=False

I actually wanted to load a specific artifact, but didn’t think of looking through the task’s output models. I have now changed to that approach, which feels much safer, so we should be all done here. Thanks!

So AgitatedDove14, if we use the CLEARML_OFFLINE_MODE environment variable instead, the program runs through again.

The only thing is that now we get errors of the form

` 0%| | 0/18 [00:00<?, ?image/s]ClearML running in offline mode, session stored in /home/manuel/.clearml/cache/offline/offline-167ceb1cd3c946df8abc7206b781b486

2022-11-07 07:49:06,986 - clearml.metrics - WARNING - Failed uploading to /home/manuel/.clearml/cache/offline/offline-167ceb1cd3c946df8abc7206b781b486/...

Hi @CostlyOstrich36, thank you for answering!

We are upgrading from v. 1.9 or so (I think) to the most recent one.

Attached below are 3 logs from the API server, Elasticsearch and the file server - does this help to debug?

Yes for example, or some other way to get credentials over to the container safely without them showing up in the checked-in code or web UI

Well duh, now it makes total sense! Should have checked docs or examples more closely 🙏

Yes if that works reliably then I think that option could make sense, it would have made things somewhat easier in my case - but this is just as good.

So the container itself gets deleted but everything is still cached because the cache directory is mounted to the host machine in the same place? Makes absolute sense and is what I was hoping for, but I can’t confirm this currently - I can see that the data is reloaded each time, even if the machine was not shut down in between. I’ll check again to find the cached data on the machine

Yes makes sense, it sounded like that from the start. Luckily, the task.flush(...) way seems to work for now 🙂

Ok great! I will debug starting with a simpler training script.

Just as a last question, is torchrun also supported rather than the (now deprecated but still usable) torch.distributed.launch ?

Sorry to ask again, but the values are still showing up in the WebUI console logs this way (see screenshot).

Here is the config that I paste into the EC2 Autoscaler Setup:

` agent {
    extra_docker_arguments: ["-e AWS_ACCESS_KEY_ID=XXXXXX", "-e AWS_SECRET_ACCESS_KEY=XXXXXX"]
    hide_docker_command_env_vars {
        enabled: true
        extra_keys: ["AWS_SECRET_ACCESS_KEY"]
        parse_embedded_urls: true
    }
} `

Never mind, it came from setting the options wrong, it has to be ...
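
For context, a rough sketch of the effect one would expect from hide_docker_command_env_vars: the values of the listed keys get masked before the docker command is printed. This is not ClearML's implementation; `mask_env_args` and the `********` placeholder are hypothetical, and the sketch only handles arguments written as a single "-e KEY=VALUE" string, like in the config above.

```python
def mask_env_args(docker_args, secret_keys, mask="********"):
    """Return a copy of docker_args with the values of secret "-e KEY=VALUE" entries masked.

    Illustration only: a hypothetical helper, not part of ClearML.
    """
    masked = []
    for arg in docker_args:
        if arg.startswith("-e ") and "=" in arg:
            # Split "KEY=VALUE" at the first "=" so values containing "=" stay intact.
            name, _, _value = arg[len("-e "):].partition("=")
            name = name.strip()
            if name in secret_keys:
                masked.append(f"-e {name}={mask}")
                continue
        masked.append(arg)
    return masked


if __name__ == "__main__":
    args = ["-e AWS_ACCESS_KEY_ID=XXXXXX", "-e AWS_SECRET_ACCESS_KEY=XXXXXX"]
    print(mask_env_args(args, {"AWS_SECRET_ACCESS_KEY"}))
```

In this sketch, keys that are not listed stay visible, so whether AWS_ACCESS_KEY_ID should also be hidden is a separate decision.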

@CostlyOstrich36, you mean the ClearML server needs access to Cloud Storage in its clearml.conf file?

Just tried it by creating a ~/clearml.conf file and setting the entry as below - unfortunately the same result. I’ve re-started the docker-compose of course.

Did I miss something here?

google.storage {

credentials_json: "/home/.../my-crendetials.json"

}

When running on our bigger research repository which includes saving checkpoints and uploading to S3, the training ends with errors as shown below and a Killed message for the main process (I do not abort the main process manually):

2023-01-26 17:37:17,527 INFO: Save the latest model.

2023-01-26 17:37:19,158 - clearml.storage - INFO - Starting upload: /tmp/.clearml.upload_model_cvqpor8r.tmp => glass-clearml/RealESR/Glass-ClearML Demo/[Lambda] FMEN distributed check, v10 fileserver u...

OK, so actually, if I run task.flush(wait_for_uploads=True) at the end of the script, it works ✔

Hi @AgitatedDove14, so I’ve managed to reproduce a bit more.

When I run very basic code via torchrun or torch.distributed.run, multiple ClearML tasks are created and visible in the UI (screenshot below). The logs and scalars are not aggregated; instead, the task of each rank reports its own.

If however I branch out via torch.multiprocessing like below, everything works as expected. The “script path” just shows the single python script, all logs an...
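
One possible workaround for the torchrun case, not confirmed anywhere in this thread: torchrun exports a RANK environment variable to every worker, so a script can check it and create the ClearML task only on rank 0. The helper names below are hypothetical and the Task.init call is left as a comment, so this is just a sketch of the guard, assuming a single task on rank 0 is what you want.

```python
import os


def get_rank(env=None):
    """Return the global rank exported by torchrun, or 0 when started with plain `python`."""
    env = os.environ if env is None else env
    return int(env.get("RANK", "0"))


def is_main_process(env=None):
    """True only for the process that should own the experiment task."""
    return get_rank(env) == 0


if __name__ == "__main__":
    if is_main_process():
        # Assumption: only rank 0 creates the task, e.g.
        # from clearml import Task
        # task = Task.init(project_name="...", task_name="...")
        print("rank 0: would create the ClearML task here")
    else:
        print(f"rank {get_rank()}: skipping task creation")
```

The trade-off is that the other ranks never create a task in this sketch, so presumably their console output would not be reported.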

Yes and yes - is that the issue, and would it likely go away if we hosted it via HTTPS?

To recap, the server started up on GCP as expected before migrating the data over. The migration was done by

- deleting the current data: `sudo rm -fR /opt/clearml/data/*`

- unpacking the backup: `sudo tar -xzf ~/clearml_backup_data.tgz -C /opt/clearml/data`

- setting permissions: `sudo chown -R 1000:1000 /opt/clearml`
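
The three migration steps above can be sketched as one small Python script, which is handy for trying the procedure on a scratch directory first. The function names are made up for illustration, and this is a sketch of the same sequence rather than a tested replacement for the shell commands. Note that, unlike `rm -fR dir/*`, the cleanup here also removes hidden files.

```python
import os
import shutil
import tarfile
from pathlib import Path


def restore_backup(backup_tgz, data_dir):
    """Empty data_dir, then unpack the gzipped tar backup into it."""
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)

    # Step 1: delete the current data (also removes dotfiles, unlike `rm -fR dir/*`).
    for entry in data_dir.iterdir():
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)
        else:
            entry.unlink()

    # Step 2: unpack the backup, like `tar -xzf backup -C data_dir`.
    with tarfile.open(backup_tgz, "r:gz") as archive:
        archive.extractall(data_dir)


def chown_recursive(root, uid, gid):
    """Step 3: like `chown -R uid:gid root`; needs root privileges to succeed."""
    os.chown(root, uid, gid)
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            os.chown(os.path.join(dirpath, name), uid, gid, follow_symlinks=False)


# Usage with the paths from the post (run as root):
# restore_backup(Path.home() / "clearml_backup_data.tgz", "/opt/clearml/data")
# chown_recursive("/opt/clearml", 1000, 1000)
```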

Won’t they be printed out as well in the Web UI? That shows the full Docker command for running the task right…