lol I ended up just buying the domain. It is much easier to pay the $10 :))

@<1726047624538099712:profile|WorriedSwan6> could you please run kubectl describe pod on the ClearML webserver Pod and paste the output here?

Oh, I see, because you are using a self-signed certificate, correct?

@<1726047624538099712:profile|WorriedSwan6> - When deploying the ClearML Agent, could you try passing the external fileserver URL in the configuration you previously mentioned? Like this:

agentk8sglue:
  fileServerUrlReference: ""

Hi Amir, could you please share the values override that you used to install the clearml server helm chart?

sure, here:

clearml:
  defaultCompany: "bialek"
  cookieDomain: "bialek.dev"
nameOverride: "clearml"
fullnameOverride: "clearml"
apiserver:
  existingAdditionalConfigsSecret: "eso-clearml-users"
  additionalConfigs:
    clearml.conf: |
      agent {
        file_server_url:
      }
  service:
    type: ClusterIP
  ingress:
    enabled: true
    ingressClassName: "bialek-on-prem"
    hostName: "clearml-api.bialek.dev"
    tlsSecretName: "tls-clearml-apiserver"
    annotations:
      cert-manager.io/cluster-issuer: bialek-dev-issuer
    path: "/"
  nodeSelector:
    kubernetes.io/hostname: clearml-server
  tolerations:
    - key: "server4clearml"
      operator: "Equal"
      value: "true"
      effect: "NoSchedule"

fileserver:
  service:
    type: ClusterIP
  ingress:
    enabled: true
    ingressClassName: "bialek-on-prem"
    hostName: "clearml-file.bialek.dev"
    tlsSecretName: "tls-clearml-fileserver"
    annotations:
      cert-manager.io/cluster-issuer: bialek-dev-issuer
    path: "/"
  storage:
    enabled: true
    data:
      size: 100Gi
  nodeSelector:
    kubernetes.io/hostname: clearml-server
  tolerations:
    - key: "server4clearml"
      operator: "Equal"
      value: "true"
      effect: "NoSchedule"

webserver:
  service:
    type: ClusterIP
  ingress:
    enabled: true
    ingressClassName: "bialek-on-prem"
    hostName: "clearml.bialek.dev"
    tlsSecretName: "tls-clearml-webserver"
    annotations:
      cert-manager.io/cluster-issuer: bialek-dev-issuer
    path: "/"
  nodeSelector:
    kubernetes.io/hostname: clearml-server
  tolerations:
    - key: "server4clearml"
      operator: "Equal"
      value: "true"
      effect: "NoSchedule"
  extraEnvVars:
    - name: WEBSERVER__fileBaseUrl
      value: ""
    - name: WEBSERVER__useFilesProxy
      value: "true"

redis:
  architecture: replication
  master:
    nodeSelector:
      kubernetes.io/hostname: clearml-server
    tolerations:
      - key: "server4clearml"
        operator: "Equal"
        value: "true"
        effect: "NoSchedule"
    persistence:
      enabled: true
      accessModes:
        - ReadWriteOnce
      size: 5Gi
      ## If undefined (the default) or set to null, no storageClassName spec is set, choosing the default provisioner
      storageClass: null
  replica:
    replicaCount: 2
    nodeSelector:
      kubernetes.io/hostname: clearml-server
    tolerations:
      - key: "server4clearml"
        operator: "Equal"
        value: "true"
        effect: "NoSchedule"

mongodb:
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 50%
  podSecurityContext:
    enabled: true
    fsGroup: 1001
  resources: {}
  enabled: true
  nodeSelector:
    kubernetes.io/hostname: clearml-server
  tolerations:
    - key: "server4clearml"
      operator: "Equal"
      value: "true"
      effect: "NoSchedule"
  architecture: replicaset
  replicaCount: 1
  arbiter:
    enabled: false
  pdb:
    create: true
  podAntiAffinityPreset: soft

elasticsearch:
  replicas: 1
  minimumMasterNodes: 1
  antiAffinityTopologyKey: ""
  antiAffinity: ""
  nodeAffinity: {}
  nodeSelector:
    kubernetes.io/hostname: clearml-server
  tolerations:
    - key: "server4clearml"
      operator: "Equal"
      value: "true"
      effect: "NoSchedule"
  volumeClaimTemplate:
    accessModes: ["ReadWriteOnce"]
    resources:
      requests:
        storage: 30Gi
  extraVolumes:
    - name: nfs
      nfs:
        path: /path/to/nfs
        server: <some_private_ip>
  extraVolumeMounts:
    - name: nfs
      mountPath: /mnt/backups
      readOnly: false
  esConfig:
    elasticsearch.yml: |
      xpack.security.enabled: false
      path.repo: ["/mnt/backups"]

Hey @<1729671499981262848:profile|CooperativeKitten94>, but this is an internal domain, which causes an SSL issue when trying to upload data to the server:

2025-05-07 18:36:22,421 - clearml.storage - ERROR - Exception encountered while uploading HTTPSConnectionPool(host='clearml-file.bialek.dev', port=443): Max retries exceeded with url: / (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1007)')))
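The "unable to get local issuer certificate" part of that error usually means the client could not find the CA that issued the server certificate in its trust store. A minimal sketch for checking this from the machine that runs the SDK, using only the Python standard library. The helper names and the CA file path in the usage note are hypothetical, not part of ClearML:

```python
import socket
import ssl
from typing import Optional


def build_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Return a verifying TLS context, optionally trusting an extra CA file."""
    # Default context: system trust store, certificate and hostname checks on.
    ctx = ssl.create_default_context()
    if cafile is not None:
        # Add the issuer's CA certificate (PEM) on top of the system store.
        ctx.load_verify_locations(cafile=cafile)
    return ctx


def probe_tls(host: str, port: int = 443, cafile: Optional[str] = None,
              timeout: float = 5.0) -> Optional[str]:
    """Attempt a TLS handshake; return None on success, else the error text."""
    try:
        ctx = build_context(cafile)
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with ctx.wrap_socket(sock, server_hostname=host):
                return None
    except (ssl.SSLError, OSError) as exc:
        # Covers verification failures, missing CA files and network errors.
        return str(exc)
```

Calling `probe_tls("clearml-file.bialek.dev")` and then the same call with `cafile="/etc/ssl/custom/ca.crt"` (a hypothetical path to the issuer's CA certificate) should show whether trusting that CA is enough to make the handshake succeed.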

..

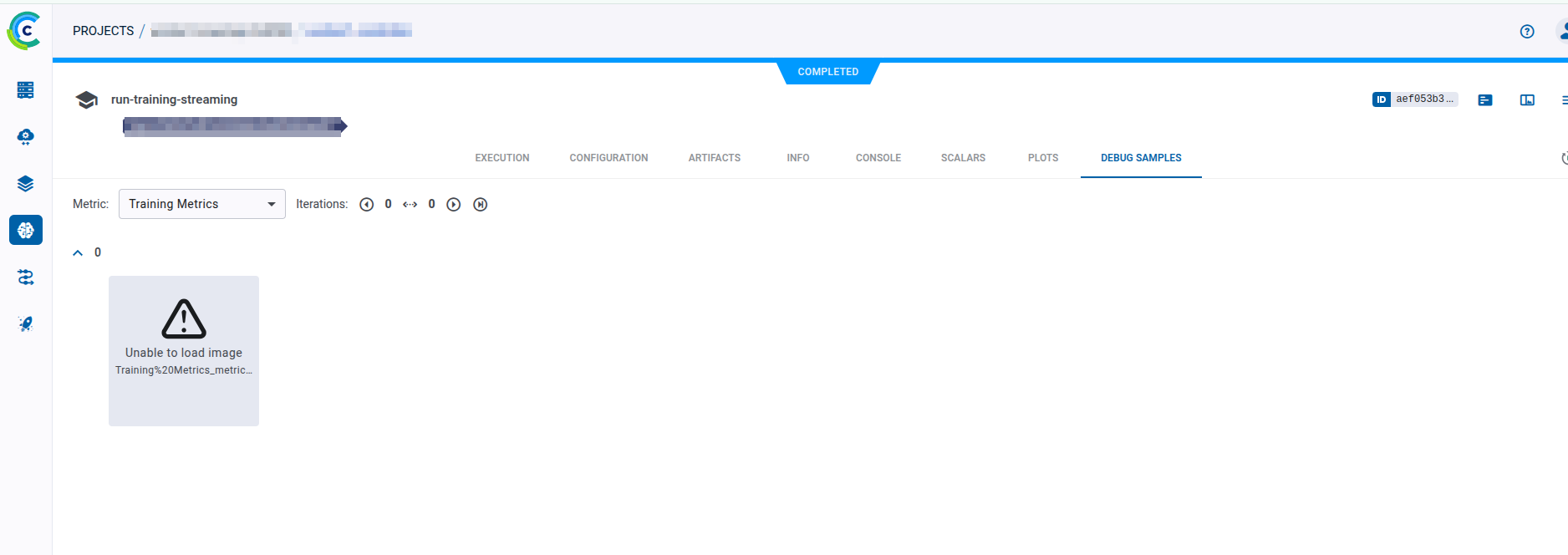

So, when the UI displays a debug image, it uses the URL stored for that image. That URL was created at runtime by the running SDK (by the Agent, in this case), so it is built from the fileserver URL the Agent was given.
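A simplified illustration of why this matters, not ClearML's actual code: the stored URL is absolute, so whichever fileserver base URL the Agent used at upload time is what the browser later has to resolve. The in-cluster service address, the object path and both helper names below are hypothetical:

```python
from urllib.parse import urljoin, urlparse


def stored_url(file_server_url: str, relative_path: str) -> str:
    """Join a fileserver base URL with an object's relative path."""
    base = file_server_url.rstrip("/") + "/"
    return urljoin(base, relative_path.lstrip("/"))


def reachable_from_browser(url: str, public_hosts: set) -> bool:
    """True if the URL's host is one a browser outside the cluster can resolve."""
    return urlparse(url).hostname in public_hosts


# Hypothetical in-cluster service address vs. the external ingress host.
internal = stored_url("http://clearml-fileserver:8081", "project/task/debug/img.png")
external = stored_url("https://clearml-file.bialek.dev", "project/task/debug/img.png")

public = {"clearml-file.bialek.dev"}
print(internal, reachable_from_browser(internal, public))
print(external, reachable_from_browser(external, public))
```

Under these assumptions, only the URL built from the external host is something the UI can fetch, which is why the Agent has to be given the external reference.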

You will need to pass the external reference:

agentk8sglue:
  fileServerUrlReference: ""

and work around the self-signed cert. You could try mounting your custom certificates into the Agent using volumes and volumeMounts, storing your certificate in a ConfigMap or something similar.
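Once the certificate is mounted, the process still has to be told to trust it. A minimal sketch, assuming the mounted file is a PEM bundle and that the client goes through the requests library or Python's default ssl settings. Whether the ClearML Agent and SDK honor these variables in your setup is an assumption worth verifying; the helper name and the path in the usage note are hypothetical:

```python
import os
from typing import Dict, Mapping, Optional


def with_custom_ca(ca_path: str,
                   base_env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment that points TLS clients at ca_path."""
    env = dict(os.environ if base_env is None else base_env)
    # Read by the requests library when verifying HTTPS connections.
    env["REQUESTS_CA_BUNDLE"] = ca_path
    # Read by OpenSSL's default verify paths, which Python's ssl defaults use.
    env["SSL_CERT_FILE"] = ca_path
    return env
```

For example, `subprocess.run(cmd, env=with_custom_ca("/etc/ssl/custom/ca-bundle.crt"))` would start a child process with both variables set. Note that a bundle containing only the custom CA replaces the default trust store, so public certificates would stop verifying unless the bundle also includes the system CAs.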

root@master:/home/bialek# kubectl -n clearml describe po clearml-webserver-847d7c947b-hfk57

Name:             clearml-webserver-847d7c947b-hfk57
Namespace:        clearml
Priority:         0
Service Account:  clearml-webserver
Node:             clearml-server/secretip
Start Time:       Sun, 04 May 2025 08:42:17 +0300
Labels:           app.kubernetes.io/instance=clearml-webserver
                  app.kubernetes.io/name=clearml
                  pod-template-hash=847d7c947b
Annotations:      cni.projectcalico.org/containerID: ebe61ed8108db66e290732f0039ccd346ef3c47fc4a71c0e9b3c70588c9f4f18
                  cni.projectcalico.org/podIP: secretip
                  cni.projectcalico.org/podIPs: secretip
Status:           Running
IP:               secretip
IPs:
  IP:           secretip
Controlled By:  ReplicaSet/clearml-webserver-847d7c947b

Init Containers:
  init-webserver:
    Container ID:  0
    Image:         docker.io/allegroai/clearml:2.0.0-613
    Image ID:      docker.io/allegroai/clearml@sha256:713ae38f7dafc9b2be703d89f16017102f5660a0c97cef65c793847f742924c8
    Port:          <none>
    Host Port:     <none>
    Command:
      /bin/sh
      -c
      set -x; while [ $(curl -sw '%{http_code}' "" -o /dev/null) -ne 200 ] ; do
        echo "waiting for apiserver" ;
        sleep 5 ;
      done
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Sun, 04 May 2025 08:42:27 +0300
      Finished:     Sun, 04 May 2025 08:44:27 +0300
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     10m
      memory:  64Mi
    Requests:
      cpu:        10m
      memory:     64Mi
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-wmf25 (ro)

Containers:
  clearml-webserver:
    Container ID:  2
    Image:         docker.io/allegroai/clearml:2.0.0-613
    Image ID:      docker.io/allegroai/clearml@sha256:713ae38f7dafc9b2be703d89f16017102f5660a0c97cef65c793847f742924c8
    Port:          80/TCP
    Host Port:     0/TCP
    Args:
      webserver
    State:          Running
      Started:      Sun, 04 May 2025 08:44:28 +0300
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     2
      memory:  1Gi
    Requests:
      cpu:      100m
      memory:   256Mi
    Liveness:   exec [curl -X OPTIONS ] delay=0s timeout=1s period=10s #success=1 #failure=3
    Readiness:  exec [curl -X OPTIONS ] delay=0s timeout=1s period=10s #success=1 #failure=3
    Environment:
      NGINX_APISERVER_ADDRESS:
      NGINX_FILESERVER_ADDRESS:
    Mounts:
      /mnt/external_files/configs from webserver-config (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-wmf25 (ro)

Conditions:
  Type                        Status
  PodReadyToStartContainers   True
  Initialized                 True
  Ready                       True
  ContainersReady             True
  PodScheduled                True
Volumes:
  webserver-config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      clearml-webserver-configmap
    Optional:  false
  kube-api-access-wmf25:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              kubernetes.io/hostname=clearml-server
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
                             server4clearml=true:NoSchedule
Events:                      <none>

root@master:/home/bialek#