I think this is the right approach, let me have a deeper look

thanks @<1724235687256920064:profile|LonelyFly9>

API calls behave much better

no problem to query tasks in other projects

AgitatedDove14 indeed, there are a few sub-projects

do you suggest to delete those first?

hey @<1688125253085040640:profile|DepravedCrow61>

I can confirm upgrading to 2.1 did the work!

(closed the issue)

Thanks!

I built a basic nginx container

` FROM nginx
COPY ./default.conf /etc/nginx/conf.d/default.conf
COPY ./includes/ /etc/nginx/includes/
COPY ./ssl/ /etc/ssl/certs/nginx/ `

copied the signed certificates and the modified nginx default.conf

the important part is to modify the compose file to redirect all traffic to the nginx container

` reverse:

container_name: reverse

image: reverse_nginx

restart: unless-stopped

depends_on:

- apiserver

- webserver

- fil...

OK I got everything to work

I think this script can be useful to other people and I'll be happy to share it

@<1523701070390366208:profile|CostlyOstrich36> is there a repo I can fork and contribute to?

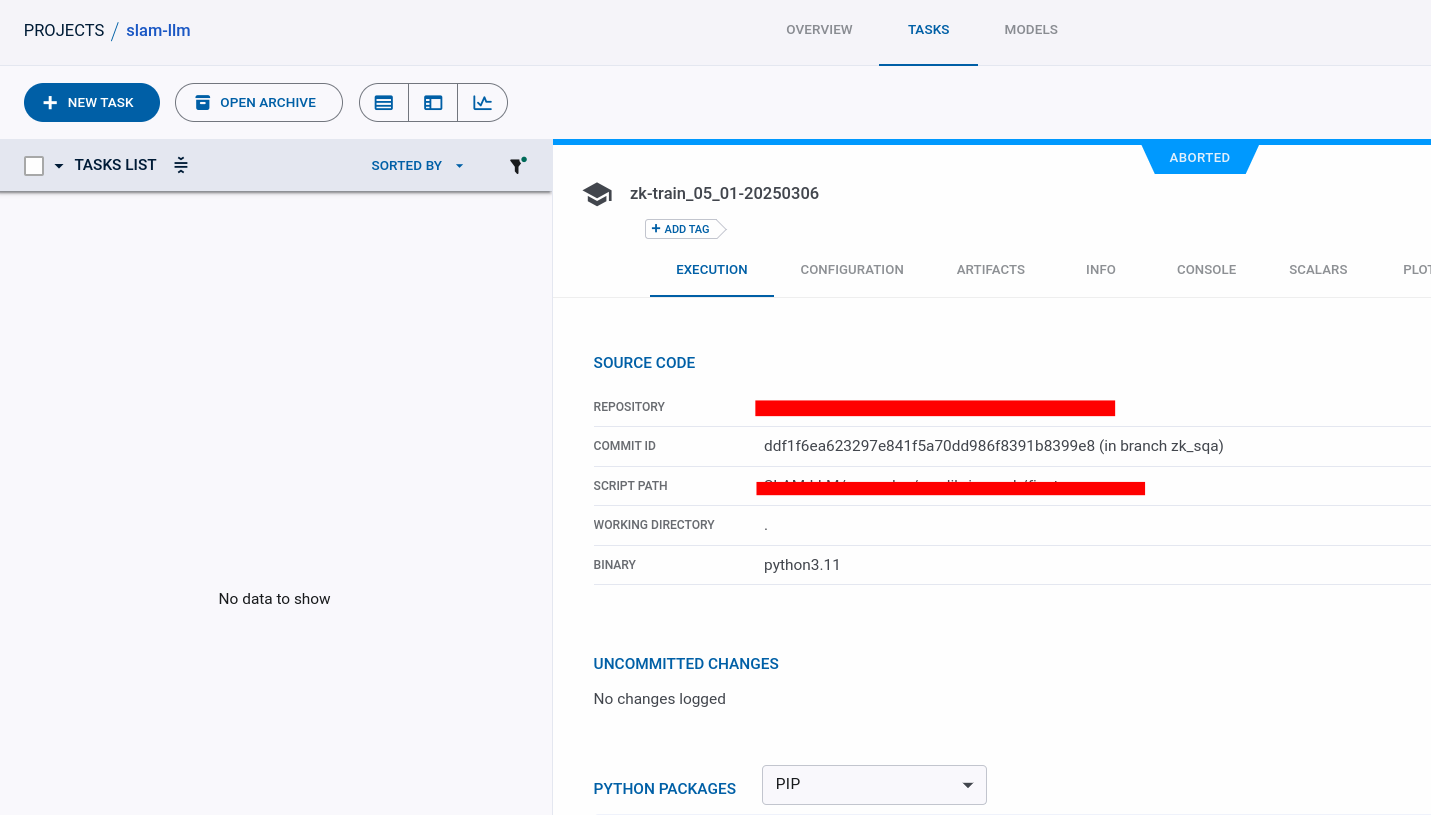

@<1876800977114238976:profile|ShakyCrocodile77> perhaps can elaborate

e.g.

what is the difference between stopped and aborted jobs?

is it a SIGKILL sent by the user?

how does ClearML decide whether a job is stopped or aborted?

the same basic job does not get overwritten; a new one is created every time

so I think I'm in the right direction

adding verify= and pointing to my CA.pem looks like the right approach
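A minimal sketch of the same idea using only the Python standard library, assuming the private CA is available as a PEM file. Instead of the `verify=` parameter (which belongs to `requests`), an `ssl` context is built from the CA file and passed to `urllib`. The function names and the path are hypothetical, and this does not show how ClearML itself is configured.

```python
import ssl
import urllib.request


def build_ca_context(ca_path):
    """Return an SSL context that trusts only the CA certificates in ca_path."""
    # Raises OSError (FileNotFoundError) if the CA file does not exist.
    return ssl.create_default_context(cafile=ca_path)


def fetch(url, ca_path, timeout_s=10.0):
    """GET url, verifying the server certificate against the CA in ca_path."""
    context = build_ca_context(ca_path)
    with urllib.request.urlopen(url, context=context, timeout=timeout_s) as resp:
        return resp.read()
```

Usage would look like `fetch("https://my-server.example/", "/path/to/CA.pem")`, where both the URL and the path are placeholders.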

now, how do I use it with ClearML API?

cleanup_service

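for task in tasks:
    try:
        deleted_task = Task.get_task(task_id=task.id)
        print(deleted_task.name)
        deleted_task.delete(
            delete_artifacts_and_models=True,
            skip_models_used_by_other_tasks=True,
            raise_on_error=False,
        )
    except Exception as ex:
        # report the failure and continue with the next task
        print(f"failed to delete task {task.id}: {ex}")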

it throws the SSL error,...

correct, but!

I wrote a script that pulls tasks and limits them to a specific user

so I'm looking for a way for users to know their own ID in advance

@<1523701070390366208:profile|CostlyOstrich36> sorry for not being clear enough

when will the next version of clearml-server be released? I can see the last version is from August; is there any ETA for a new release in the upcoming 1-2 months?

hey @<1523701070390366208:profile|CostlyOstrich36>

there are no issues, I had simulated one in order to see how my automation behaves when ClearML is down

I'm looking to see how to make sure my code behaves nicely in case ClearML is down

so I'm trying to understand why it does not time out gracefully when, for some reason, the ClearML server is unreachable (network issues or invalid credentials)
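One way to keep automation from hanging on an unreachable server is to run the blocking call in a daemon thread and give up after a deadline. This is a generic Python pattern, not a ClearML feature; `call_with_timeout` and its `default` fallback are hypothetical names used only for illustration.

```python
import threading


def call_with_timeout(func, timeout_s, default=None):
    """Run func() in a daemon thread; return default on timeout or error."""
    result = {}

    def runner():
        try:
            result["value"] = func()
        except Exception as exc:  # e.g. connection or credential errors
            result["error"] = exc

    worker = threading.Thread(target=runner, daemon=True)
    worker.start()
    worker.join(timeout_s)

    # Still running after the deadline, or it failed: fall back.
    if worker.is_alive() or "error" in result:
        return default
    return result["value"]
```

The daemon thread is abandoned rather than killed, so the training loop can continue while the stuck call is left behind. Whether that trade-off is acceptable depends on the job.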

I manage clearml-server for many users

I wrote a script that pulls tasks (for review, pending deletion) and limits them to a specific user (I saw that the REST API can filter only by user ID)

so the user will need to provide the script with their own user ID in advance

I guess my options are:

- maintain a static list of users (which I get from the users.get_all endpoint)

- add another query to my script that matches user name to user ID before running tasks.get_all for the specific user ID
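The second option could be sketched roughly as below, assuming the users.get_all response has already been reduced to a list of dicts with `id` and `name` keys (an assumption about the shape, not taken from the API docs). `build_user_index` and `resolve_user_id` are hypothetical helper names.

```python
def build_user_index(users):
    """Map user name -> list of user IDs (names may not be unique)."""
    index = {}
    for user in users:
        index.setdefault(user["name"], []).append(user["id"])
    return index


def resolve_user_id(users, name):
    """Return the single ID for name; raise if it is missing or ambiguous."""
    ids = build_user_index(users).get(name, [])
    if len(ids) != 1:
        raise LookupError(
            f"expected exactly one user named {name!r}, found {len(ids)}"
        )
    return ids[0]
```

The resolved ID would then be passed as the user filter of the task query, so users only need to know their own name.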

SuccessfulKoala55 where does the SDK store the cache?

we have a cluster with shared storage, so all compute nodes running the jobs see the same storage

should I assume it will use the cache and overwrite identical jobs?

trying to reproduce this, but every new identical job still gets a new task ID

SuccessfulKoala55 any clue?

how do you know the ID?

I'm looking for this ...

@<1523701070390366208:profile|CostlyOstrich36> unfortunately, this is not the behavior we are seeing

the exact same issue happened tonight

at epoch 53 ClearML was shut down; the job did not continue to epoch 54 and was eventually killed by the watchdog timer

I can see tasks in the project, but nothing for my user

I'm looking at the iptables configuration that was done by other teams

trying to find which rule blocks clearml

(everything worked when iptables was disabled)

the way I do pagination is wrong

@<1523701070390366208:profile|CostlyOstrich36> might throw a champion's tip over here 🙂

trying to use projects.get_all to pull all my projects into a single file

and there are more than 500 ...
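A minimal pagination sketch for this kind of "more than one page" query, assuming the endpoint accepts a zero-based page number and a page size. `fetch_page` is a hypothetical callable that wraps the actual projects.get_all request and returns one page as a list.

```python
def fetch_all(fetch_page, page_size=500):
    """Collect every item by requesting pages until a short page comes back."""
    items = []
    page = 0
    while True:
        batch = fetch_page(page, page_size)
        items.extend(batch)
        # A page shorter than page_size (including an empty one) is the last.
        if len(batch) < page_size:
            break
        page += 1
    return items
```

Stopping on a short page costs one extra request when the total is an exact multiple of the page size, but it avoids relying on a total count being present in the response.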

didn't do that test

I usually wait for the first job to finish before I start a new one

looks like I can't interact with the fileserver?

iptables -A INPUT -p tcp --dport 8080 -j ACCEPT

iptables -A INPUT -p tcp --dport 8008 -j ACCEPT

iptables -A INPUT -p tcp --dport 8081 -j ACCEPT

if I use the URL directly" None

I see it (the list on the left shows no data as well)

in case this helps someone else: I did not have root access to the training machine to add the cert to the trust store

you can point your python to your own CA using:

export CURL_CA_BUNDLE=/path/to/CA.pem
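To check from Python which bundle would be picked up, here is a small sketch that mirrors the lookup order the `requests` library uses when `verify` is left at its default (`REQUESTS_CA_BUNDLE` first, then `CURL_CA_BUNDLE`). `resolve_ca_bundle` is a hypothetical helper, not part of any library.

```python
import os


def resolve_ca_bundle(environ=None):
    """Return the CA bundle path taken from the environment, or None."""
    env = os.environ if environ is None else environ
    # Same precedence as requests: REQUESTS_CA_BUNDLE wins over CURL_CA_BUNDLE.
    return env.get("REQUESTS_CA_BUNDLE") or env.get("CURL_CA_BUNDLE") or None


if __name__ == "__main__":
    print("CA bundle in effect:", resolve_ca_bundle())
```

Running this in the same shell as the training job is a quick way to confirm the export actually reached the process.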