Got it. Is there any solution where the credentials wouldn't be visible to everyone?

Thanks, AgitatedDove14. Does this go in the local clearml.conf file with each user's credentials, or in the conf file for the server?

The second option looks good. Would everyone's credentials be displayed on the server, though?
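One way to keep personal keys out of any shared file is for each user to export them as environment variables on their own machine. The sketch below assumes that approach: `CLEARML_API_ACCESS_KEY` and `CLEARML_API_SECRET_KEY` are the variable names the ClearML SDK reads, while the helpers `load_user_credentials` and `mask` are hypothetical. They only check that the keys are present and hide the secret before anything is displayed.

```python
import os


def mask(secret: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters of a secret."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


def load_user_credentials(env=os.environ) -> dict:
    """Hypothetical helper: read this user's ClearML keys from the environment.

    Raises KeyError naming the missing variables instead of silently
    falling back to a shared config file.
    """
    names = ("CLEARML_API_ACCESS_KEY", "CLEARML_API_SECRET_KEY")
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return {
        "access_key": env["CLEARML_API_ACCESS_KEY"],
        "secret_key": env["CLEARML_API_SECRET_KEY"],
    }
```

When logging which identity a job runs under, print `creds["access_key"]` and `mask(creds["secret_key"])`, never the raw secret.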

Yes. On my Windows machine I am running:

`cloned_task = Task.clone(source_task=base_task, name="Auto generated cloned task")`

`Task.enqueue(cloned_task.id, queue_name='test_queue')`

I see the task start successfully on the ClearML server. Its installed packages section includes `pywin32 == 303`, even though that package is not in my requirements.txt.

In the Results > Console section, I see the agent running and trying to install all the packages, but it stops at pywin32. Some lines from t...

Yes. I think the problem is that it's trying to recreate the environment the task was spun up on (a Windows machine) on a Linux EC2 instance.
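If the recorded package list is what breaks the Linux install, one standard Python packaging mechanism that helps is a PEP 508 environment marker: pip skips a line such as `pywin32==303; sys_platform == "win32"` on Linux. The sketch below rewrites a requirements list that way. The set of Windows-only package names is an assumption to adjust, and how the rewritten list is fed back to the task depends on your ClearML setup.

```python
import re

# Packages assumed to be Windows-only; extend this set as needed.
WINDOWS_ONLY = {"pywin32", "pywinpty"}


def guard_windows_only(requirements, windows_only=WINDOWS_ONLY):
    """Append a `sys_platform == "win32"` marker to Windows-only requirements.

    Comments, blank lines and lines that already carry a marker (contain ';')
    are returned unchanged.
    """
    guarded = []
    for line in requirements:
        stripped = line.strip()
        if not stripped or stripped.startswith("#") or ";" in stripped:
            guarded.append(line)
            continue
        # The package name ends at the first version operator, bracket or space.
        name = re.split(r"[<>=!~\[\s]", stripped, maxsplit=1)[0]
        if name.lower() in windows_only:
            guarded.append(f'{stripped}; sys_platform == "win32"')
        else:
            guarded.append(line)
    return guarded
```

Markers keep a single requirements list usable on both platforms, which is simpler than maintaining separate Windows and Linux files.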

Y'all thought of everything. This fixed it! I'm having another issue now, but will post it separately.

OK, I suppose that will have to do for now. Thank you!

Huh. I really like how easy it is with the automatic TensorBoard logging. Is there a way to still use auto_connect but limit the number of debug images?

This is what the instance state looks like, as logged by clearml:

Also, this would change it globally. Is there a way to set it for specific jobs and metrics?
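Independently of any ClearML setting, one way to cap debug images per job and per metric is to throttle on the client side before an image ever reaches TensorBoard. The `ImageThrottle` class below is a hypothetical sketch, not a ClearML feature: it allows at most one image every `every_n_steps` steps and at most `max_images` images per metric, with optional per-metric overrides. Because each training script creates its own instance, the limits are per job rather than global.

```python
from collections import defaultdict


class ImageThrottle:
    """Hypothetical per-metric limiter for debug-image logging."""

    def __init__(self, every_n_steps=100, max_images=20, overrides=None):
        self.default = (every_n_steps, max_images)
        # metric name -> (every_n_steps, max_images)
        self.overrides = overrides or {}
        # metric name -> number of images allowed so far
        self.logged = defaultdict(int)

    def should_log(self, metric: str, step: int) -> bool:
        every_n, max_images = self.overrides.get(metric, self.default)
        if step % every_n != 0 or self.logged[metric] >= max_images:
            return False
        self.logged[metric] += 1
        return True
```

In a training loop, call `throttle.should_log(tag, step)` before `SummaryWriter.add_image(tag, img, step)`, so the automatic TensorBoard capture only sees the images that pass.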

We were able to find an error from the autoscaler agent:

Stuck spun instance dynamic_worker:clearml-agent-autoscale:p2.xlarge:i-015001a93e0910a09 of type clearml-agent-autoscale

2022-04-19 19:16:58,339 - clearml.auto_scaler - INFO - Spinning down stuck worker: 'dynamic_worker:clearml-agent-autoscale:p2.xlarge:i-015001a93e0910a09
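The worker name in these log lines appears to pack several fields together, separated by colons. Assuming the layout `dynamic_worker:<resource name>:<instance type>:<instance id>` (inferred only from the lines above, not from ClearML documentation), a small parser can pull out the EC2 instance ID so the stuck machine can be looked up in the AWS console. Both helpers are hypothetical.

```python
import re
from typing import List, NamedTuple


class WorkerInfo(NamedTuple):
    prefix: str
    resource: str
    instance_type: str
    instance_id: str


def parse_worker_name(name: str) -> WorkerInfo:
    """Split an autoscaler worker name, assuming the four-field layout above."""
    parts = name.strip().strip("'\"").split(":")
    if len(parts) != 4:
        raise ValueError(f"unexpected worker name layout: {name!r}")
    return WorkerInfo(*parts)


def worker_names_in_log(text: str) -> List[str]:
    """Find every dynamic_worker name mentioned in a block of log text."""
    return re.findall(r"dynamic_worker:[^\s']+", text)
```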

Thanks! I installed CUDA/cuDNN on the image, and now the GPU is being utilized.

TimelyPenguin76, I'm not sure what you mean by "as a service or via the apps", but we are self-hosting it. Does that answer the question?

Also, I'm not sure what you mean by which "clearml version". How do we check this? The clearml Python package is 1.1.4. Is that what you wanted?
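For the Python packages, the installed version can be read from the environment's package metadata with the standard library, without importing `clearml` itself. A minimal sketch, assuming Python 3.8 or later; `installed_version` is a hypothetical helper name. The server's version is a separate number and is not covered here.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None
```

Run in the environment described above, `installed_version("clearml")` should report the same 1.1.4. On a machine running the agent, `installed_version("clearml-agent")` gives the agent's version.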

Is there a way to explicitly make it install certain packages, or at least stick to the requirements.txt file rather than the actual environment?
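Whatever mechanism ends up pinning the packages, a common first step is turning requirements.txt into an explicit list. A minimal stdlib sketch follows. `read_requirements` and `read_requirements_file` are hypothetical helpers, and they deliberately skip comments, blank lines and pip option lines such as `-r` or `--index-url` rather than resolving them. The ClearML SDK also offers `Task.add_requirements()` for pinning individual packages from code, but check its documentation for your version before relying on it.

```python
import re
from pathlib import Path
from typing import List


def read_requirements(text: str) -> List[str]:
    """Return the requirement specifiers found in requirements.txt content.

    Full-line and inline comments are dropped, and option lines such as
    "-r other.txt" or "--index-url ..." are skipped rather than resolved.
    """
    requirements = []
    for raw in text.splitlines():
        # pip treats whitespace followed by '#' as the start of an inline comment.
        line = re.sub(r"\s+#.*$", "", raw).strip()
        if not line or line.startswith(("#", "-")):
            continue
        requirements.append(line)
    return requirements


def read_requirements_file(path: str = "requirements.txt") -> List[str]:
    """Convenience wrapper that reads the file from disk first."""
    return read_requirements(Path(path).read_text(encoding="utf-8"))
```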

Is there a way to get the instance's external IP address from ClearML? I would've thought it would be in the Info tab, but it's not.
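If the instance is an AWS EC2 machine, one option outside ClearML is to ask the EC2 instance metadata service from inside the instance itself. The sketch below uses the IMDSv2 token flow against the link-local metadata address. It is AWS-specific, it is not something ClearML provides, and it returns None when run anywhere else or when the instance has no public IPv4 address. The function name `ec2_public_ipv4` is hypothetical.

```python
import http.client
import urllib.request
from typing import Optional

IMDS = "http://169.254.169.254/latest"


def ec2_public_ipv4(timeout: float = 1.0) -> Optional[str]:
    """Return this EC2 instance's public IPv4 via IMDSv2, or None."""
    try:
        # Step 1: request a short-lived session token (IMDSv2).
        token_req = urllib.request.Request(
            f"{IMDS}/api/token",
            method="PUT",
            headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
        )
        with urllib.request.urlopen(token_req, timeout=timeout) as resp:
            token = resp.read().decode()
        # Step 2: read the public address using that token.
        ip_req = urllib.request.Request(
            f"{IMDS}/meta-data/public-ipv4",
            headers={"X-aws-ec2-metadata-token": token},
        )
        with urllib.request.urlopen(ip_req, timeout=timeout) as resp:
            return resp.read().decode().strip()
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError (e.g. 404 with no public IP) and timeouts land here.
        return None
```

A task could call this at startup and report the result somewhere visible, but that wiring is left out because it depends on how your tasks log metadata.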

No, 64-bit. But do you mean the PC where I am spinning the task up, or the machine where I am running the task?

I'm thinking it may have something to do with `Using cached repository in "/home/ubuntu/.clearml/vcs-cache/ai_dev.git.42a0e941ddbf5c69216f37ceac2eca6b/ai_dev.git"`. We tried to reset the machines, but the cache is still there. Any idea how to clear it?
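The path in that log line suggests the agent keeps cloned repositories under `~/.clearml/vcs-cache` on the machine it runs on. Assuming that location, and assuming the agent is stopped first, a minimal sketch for removing one cached repository or the whole cache directory follows. `clear_vcs_cache` is a hypothetical helper; the agent is expected to clone the repository again on its next run, but verify that on your setup. If the cache survives a machine reset, it may be baked into the machine image or a persistent volume, in which case it has to be cleared there.

```python
import shutil
from pathlib import Path
from typing import List, Optional

DEFAULT_CACHE = Path.home() / ".clearml" / "vcs-cache"


def clear_vcs_cache(cache_dir: Path = DEFAULT_CACHE,
                    repo_prefix: Optional[str] = None) -> List[Path]:
    """Delete cached repositories and return the paths that were removed.

    With repo_prefix (e.g. "ai_dev.git"), only entries whose names start with
    that prefix are removed; otherwise every entry in the cache is removed.
    """
    if not cache_dir.is_dir():
        return []
    removed = []
    for entry in sorted(cache_dir.iterdir()):
        if repo_prefix and not entry.name.startswith(repo_prefix):
            continue
        if entry.is_dir() and not entry.is_symlink():
            shutil.rmtree(entry)
        else:
            entry.unlink()
        removed.append(entry)
    return removed
```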

@<1856144902816010240:profile|SuccessfulCow78> can you please help provide

@<1855782498290634752:profile|AppetizingFly3>

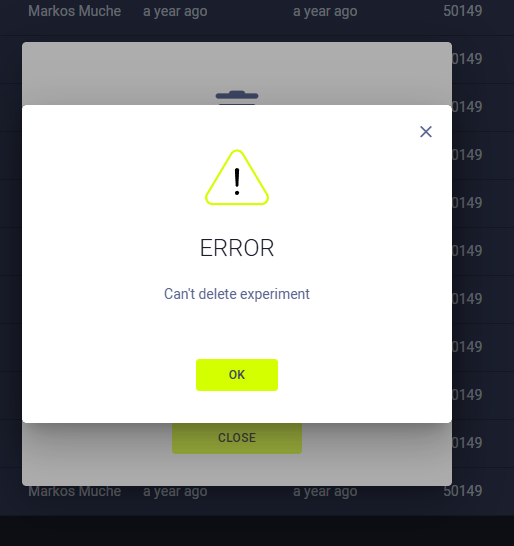

It seems to be a memory issue with the VM that hosts ClearML filling up. I am trying to delete some experiments, but now I get:

Hi @<1523701070390366208:profile|CostlyOstrich36>.

- At first I was getting a lot of errors there showing it couldn't connect to clearml-elastic (`urllib3.exceptions.ReadTimeoutError: HTTPConnectionPool(host='elasticsearch', port='9200'): Read timed out. (read timeout=60)`), so I reset both apiserver and clearml-elastic.
- After the reset, when I tried deleting in the frontend, I got a more informative error on the frontend: `General data error (TransportError(503, 'search_phase_executi...
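A 503 `search_phase_execution` error after the host filled up would be consistent with Elasticsearch's disk watermarks. By default, Elasticsearch stops allocating new shards to a node at 85% disk usage (low), moves shards away at 90% (high), and marks indices read-only at 95% (flood stage). A minimal stdlib sketch for checking a volume against those default thresholds follows. The path is an assumption: point it at wherever the Elasticsearch data directory is mounted on the ClearML server host (`/opt/clearml/data` in a typical docker-compose install, but check your compose file). Both helper names are hypothetical.

```python
import shutil

# Elasticsearch's default disk watermarks, as fractions of total disk, highest first.
WATERMARKS = [(0.95, "flood_stage"), (0.90, "high"), (0.85, "low")]


def watermark_level(used_fraction: float) -> str:
    """Name the highest default watermark that `used_fraction` has crossed."""
    for threshold, name in WATERMARKS:
        if used_fraction >= threshold:
            return name
    return "ok"


def check_disk(path: str = "/"):
    """Return (percent used, watermark level) for the filesystem holding `path`."""
    usage = shutil.disk_usage(path)
    # `free` is the space available to unprivileged users; count the rest as used.
    used_fraction = 1 - usage.free / usage.total
    return round(100 * used_fraction, 1), watermark_level(used_fraction)
```

On Elasticsearch 7.4 and later, the read-only block applied at the flood stage is released automatically once usage drops back below the high watermark, so freeing space may be enough for deletions to work again.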

Perfect. Exactly what I was looking for!