
CostlyOstrich36 Thanks, I tried that, but I get `"authenticated": False` when using `POST /login.supported_modes`, and this leads to an "Unauthorized (missing credentials)" response when using `POST /tasks.get_all`.

Any clues on how to authenticate myself?
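A minimal sketch of authenticating raw REST calls, assuming the server's `auth.login` endpoint accepts the access key and secret key as HTTP Basic credentials, returns a token under `data.token`, and that later calls send that token as a Bearer header. The API server address (`http://localhost:8008`) and the helper names are hypothetical; check them against your server's API reference.

```python
import base64
import json
import urllib.request

# Hypothetical API server address; replace with your own.
API_SERVER = "http://localhost:8008"


def basic_auth_header(access_key: str, secret_key: str) -> str:
    """Encode key/secret credentials as an HTTP Basic Authorization header value."""
    raw = f"{access_key}:{secret_key}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def bearer_header(token: str) -> str:
    """Build the Authorization header value for token-authenticated calls."""
    return f"Bearer {token}"


def post_json(url: str, payload: dict, authorization: str) -> dict:
    """POST a JSON body with the given Authorization header and decode the JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": authorization},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def login(access_key: str, secret_key: str, api_server: str = API_SERVER) -> str:
    """Exchange key/secret for a token (assumes the reply looks like {"data": {"token": ...}})."""
    reply = post_json(f"{api_server}/auth.login", {}, basic_auth_header(access_key, secret_key))
    return reply["data"]["token"]


def get_all_tasks(token: str, api_server: str = API_SERVER, **filters) -> list:
    """Call tasks.get_all with a Bearer token (assumes the reply looks like {"data": {"tasks": [...]}})."""
    reply = post_json(f"{api_server}/tasks.get_all", filters, bearer_header(token))
    return reply["data"]["tasks"]
```

Usage would be along the lines of `token = login("<access_key>", "<secret_key>")` followed by `get_all_tasks(token, project=["<project_id>"])`; the point is that every call after the login carries an `Authorization` header.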

Hi,

My conclusion is that these errors are probably caused by a CUDA version mismatch.

The latest clearml-serving-triton Docker container is built for CUDA 11.7, and my machine is configured with CUDA 12.X.

Are there any plans to support ClearML serving with CUDA 12.X in the near future?

I figured out that I was using the wrong API call. I accidentally used the backend `task.set_project()` instead of the frontend `task.move_to_project()`, and the resulting errors were not informative.

Hi,

That doesn't work for me in the agent queues, because somehow the `output_uri` gets switched at runtime, and the ClearMLSaver then creates a new directory under `/tmp` in the Docker container. I solved it by manually specifying the `dirname`, but I'm not happy that I don't understand how `output_uri` behaves and is configured.

It's for collecting experiment results at the analysis stage.

I'm doing:

```python
from clearml.backend_api import Session
from clearml.backend_api.services import tasks, events, projects

...  # get project_ids

session = Session()
res = session.send(tasks.GetAllRequest(project=project_ids))
```

This response object `res` is limited to 500 tasks, and no `scroll_id` is provided.

When I use `tasks.get_all` (which is not the RESTful API), I don't have the option to filter by project name.
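One way around a fixed cap per response is to request page after page until a short page comes back. A minimal sketch, assuming `tasks.get_all` accepts the `page` and `page_size` request fields listed in the ClearML REST API reference; the pager itself is generic and takes any page-fetching callable, so the ClearML part is only shown as a hedged comment.

```python
from typing import Callable, Iterator, List, TypeVar

T = TypeVar("T")


def iterate_pages(fetch_page: Callable[[int, int], List[T]], page_size: int = 500) -> Iterator[T]:
    """Yield every item by calling fetch_page(page, page_size) until a short page is returned."""
    page = 0
    while True:
        batch = fetch_page(page, page_size)
        yield from batch
        if len(batch) < page_size:  # last (possibly empty) page reached
            return
        page += 1


# Hedged ClearML usage, reusing `session`, `tasks` and `project_ids` from the snippet above.
# Assumes GetAllRequest accepts `page`/`page_size` and the reply exposes `.response.tasks`:
#
# def fetch_page(page, page_size):
#     res = session.send(tasks.GetAllRequest(project=project_ids, page=page, page_size=page_size))
#     return res.response.tasks
#
# all_tasks = list(iterate_pages(fetch_page))
```

Stopping on a short page costs one extra (empty) request when the total is an exact multiple of `page_size`, which keeps the loop independent of any total-count field.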

Every experiment includes fitting a model 7 times (once per subject). For a given metric (say, `train_acc`), I would like to group the series into one plot, which is the default setting (good). What I want is to manually name each series after its subject (Subject 1, Subject 2, etc.).

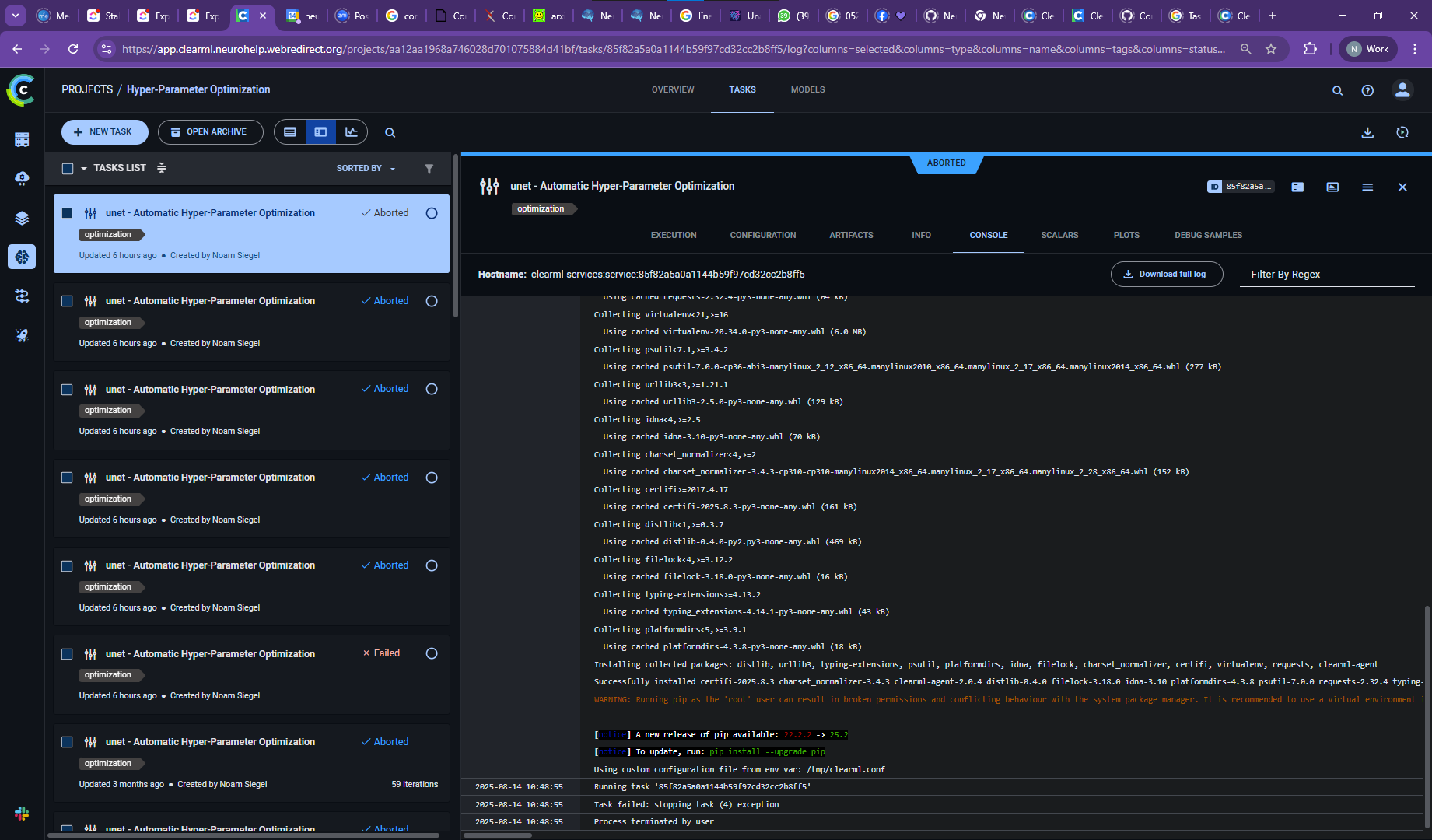

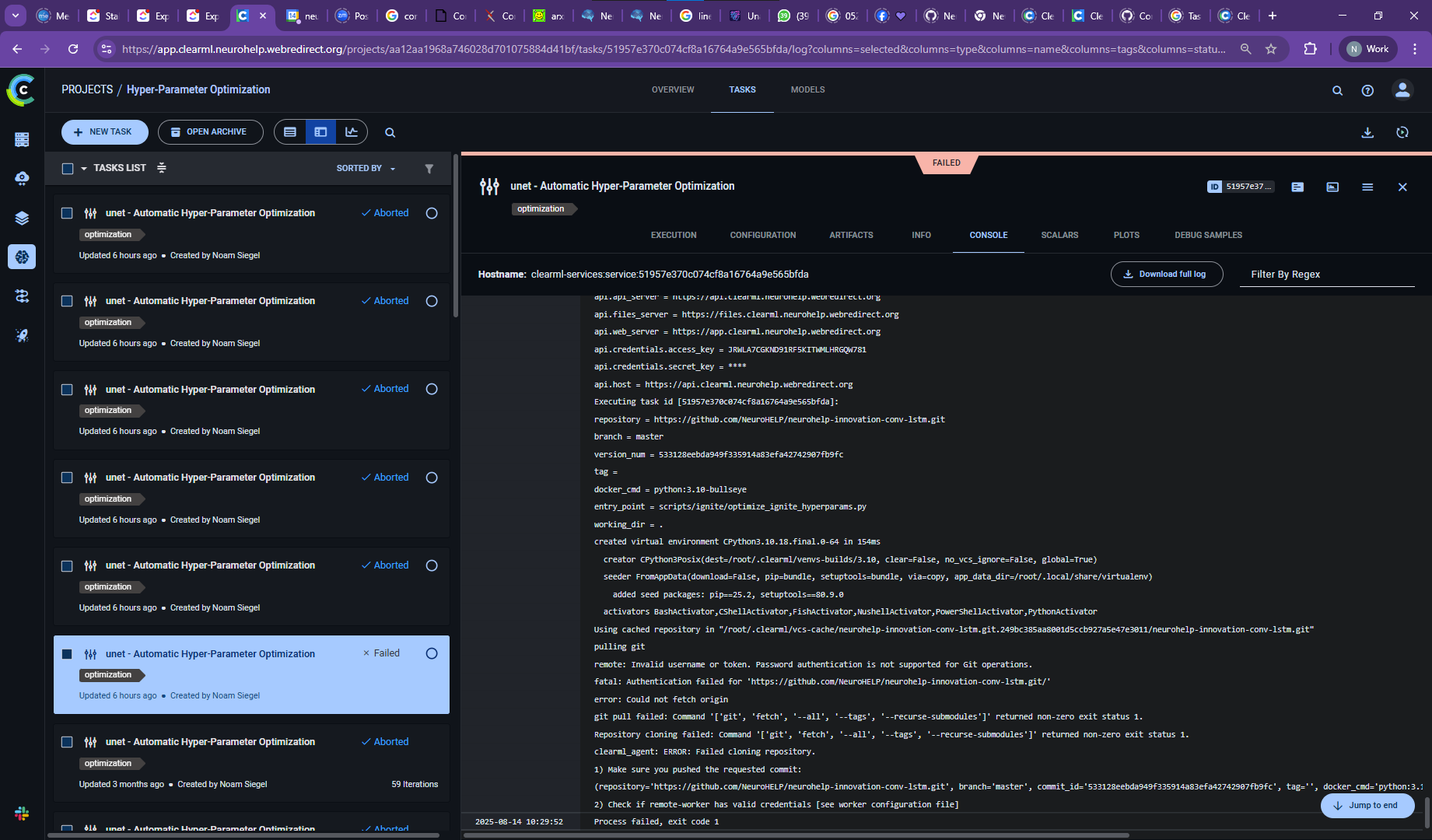
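ClearML's `Logger.report_scalar()` takes a `title` (the plot) and a `series` (the line within that plot), so reporting with the metric as the title and the subject as the series should put all subjects on one plot under their own names. A minimal sketch; `report_per_subject` and the shape of `history` are hypothetical, and the logger is passed in so the function works with any object that has a `report_scalar` method. This covers manual reporting only, not the names produced by automatic framework logging.

```python
from typing import Mapping, Sequence


def report_per_subject(logger, metric: str, history: Mapping[str, Sequence[float]]) -> None:
    """Report one series per subject under a single plot titled `metric`.

    `history` maps a subject label (e.g. "Subject 1") to its per-epoch values.
    """
    for subject, values in history.items():
        for iteration, value in enumerate(values):
            logger.report_scalar(title=metric, series=subject, value=value, iteration=iteration)


# Hedged usage with ClearML (acc_subject_1 / acc_subject_2 are hypothetical lists of values):
#
# from clearml import Task
# task = Task.init(project_name="<project>", task_name="<task>")
# report_per_subject(task.get_logger(), "train_acc",
#                    {"Subject 1": acc_subject_1, "Subject 2": acc_subject_2})
```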

The HPO service itself is also stopping.

On the first attempt it failed with an error; the remaining attempts were then aborted without any error. ClearML should have the proper Git certificate, so I'm not sure what the problem is. I tried restarting the server, but that did not help.

I mean: how do I set the task name at the start of the script for a remotely running task?
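A hedged sketch of one option: as far as I know, when an agent executes the script, `Task.init()` attaches to the task being run, so the name can be changed right afterwards with `task.set_name()`. The naming scheme and the `build_task_name` / `rename_task` helpers are hypothetical; the task is passed in so the helpers work with any object exposing `set_name`.

```python
from typing import Mapping


def build_task_name(base: str, params: Mapping[str, object]) -> str:
    """Hypothetical naming scheme: base name followed by sorted key=value pairs."""
    suffix = ", ".join(f"{key}={params[key]}" for key in sorted(params))
    return f"{base} ({suffix})" if suffix else base


def rename_task(task, base: str, params: Mapping[str, object]) -> str:
    """Rename `task` (any object with a set_name method) and return the new name."""
    name = build_task_name(base, params)
    task.set_name(name)
    return name


# Hedged usage at the top of the script (subject_id is a hypothetical variable):
#
# from clearml import Task
# task = Task.init(project_name="<project>", task_name="<placeholder>")
# rename_task(task, "train", {"subject": subject_id})
```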

I would like to get all tasks where the project name contains "inference/".

The way I did it is to first filter the results of `get_projects()` and then get the tasks.

Surely there is a way to do this in a single `get_tasks` call?
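The two-step approach described above can be written as a small substring filter over the project list, followed by one task query restricted to the matching project IDs. A minimal sketch; `ProjectRef` is a hypothetical stand-in for the project records returned by `Task.get_projects()`, and passing the IDs through `task_filter={"project": ...}` is an assumption to verify against your clearml version.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class ProjectRef:
    """Hypothetical stand-in for a project record exposing `id` and `name`."""
    id: str
    name: str


def project_ids_containing(projects: Iterable, fragment: str) -> List[str]:
    """Return the IDs of projects whose full name contains `fragment` (plain substring match)."""
    return [project.id for project in projects if fragment in project.name]


# Hedged usage with ClearML:
#
# from clearml import Task
# ids = project_ids_containing(Task.get_projects(), "inference/")
# inference_tasks = Task.get_tasks(task_filter={"project": ids})
```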

Thanks 🙂

Some tasks have so many models, it gets really messy without proper names 😅

Hi @CostlyOstrich36

Unfortunately, running it from a regular worker still does not seem to work. The script runs for about 10 seconds on my machine (locally) and then quits. In the web server I see the scheduler task running in the DevOps project, but publishing models does not trigger the requested Test task.
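For comparison, the documented pattern for cloning a task whenever a model is published looks roughly like the sketch below. It assumes `clearml.automation.TriggerScheduler` with `add_model_trigger(..., trigger_on_publish=True)` and `start_remotely()`; the task ID, queue names, and project name are placeholders, and `register_publish_trigger` is a hypothetical wrapper. As I understand it, `start_remotely()` enqueues the scheduler and ends the local process, so a short local run is expected; the trigger only fires while an agent serving that queue keeps the scheduler running.

```python
def register_publish_trigger(scheduler, base_task_id: str, target_queue: str, watched_project: str) -> None:
    """Ask `scheduler` to clone and enqueue `base_task_id` when a model in `watched_project` is published."""
    scheduler.add_model_trigger(
        schedule_task_id=base_task_id,    # task to clone on each trigger
        schedule_queue=target_queue,      # queue the clone is enqueued to
        trigger_project=watched_project,  # only react to models from this project
        trigger_on_publish=True,          # fire when a model is published
    )


# Hedged usage (placeholders in angle brackets):
#
# from clearml.automation import TriggerScheduler
# scheduler = TriggerScheduler(pooling_frequency_minutes=3.0)
# register_publish_trigger(scheduler, "<test_task_id>", "default", "<model_project>")
# scheduler.start_remotely(queue="services")
```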

Yes, thanks for your help! Now I need to find out how to change the PL naming.

Hi @CostlyOstrich36

I'm running the latest open-source server (2.1.0) and the latest clearml-serving package (1.3.5), and the endpoints screen is still blank.

Any updates on when this feature might be integrated, or am I missing something?

Thanks! Surprisingly, I didn't see this documented anywhere.

Thanks @AgitatedDove14

Works well

SuccessfulKoala55, thank you, but I still don't know how to authenticate my REST API session.

This is how to start and use an authenticated session:

https://clear.ml/docs/latest/docs/faq#:~:text=system_site_packages%20to%20true.-,ClearML%20API,-%23

This is the logging

I tried setting up the Traefik Load Balancer to expose the on-prem server externally using the subdomain configuration.

I see this in `/opt/clearml/logs/fileserver.log`:

`[2024-12-11 15:20:09,439] [8] [WARNING] [urllib3.connectionpool] Retrying (Retry(total=239, connect=3, read=240, redirect=240, status=240)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7ceb8bf3cf70>: Failed to establish a new connection: [Errno -2] Name or service n...`

It just gets stuck on this screen, and no published models trigger a clone of the base task.

What am I missing?