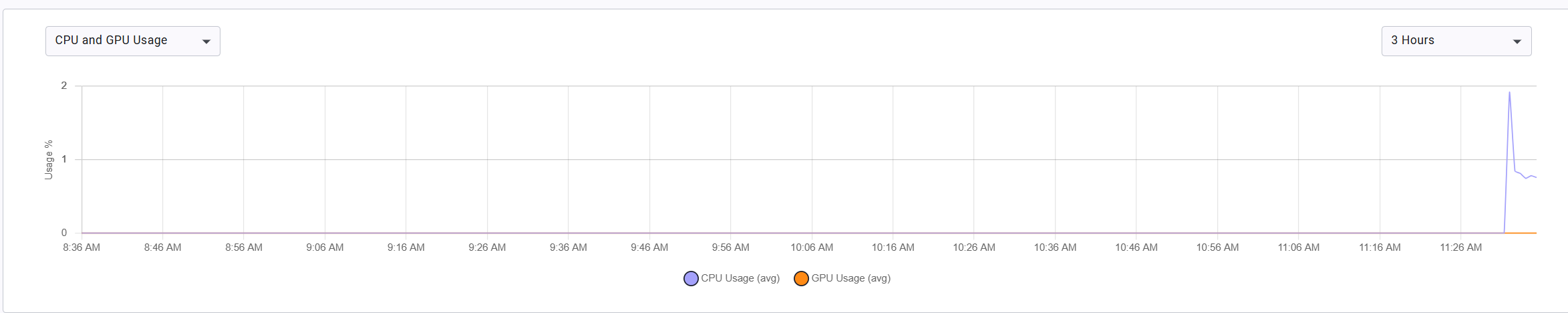

👋 Hi everyone! We’re facing an issue where ClearML workloads run successfully on our Kubernetes cluster (community edition), but never utilize the GPU — des...

4 months ago

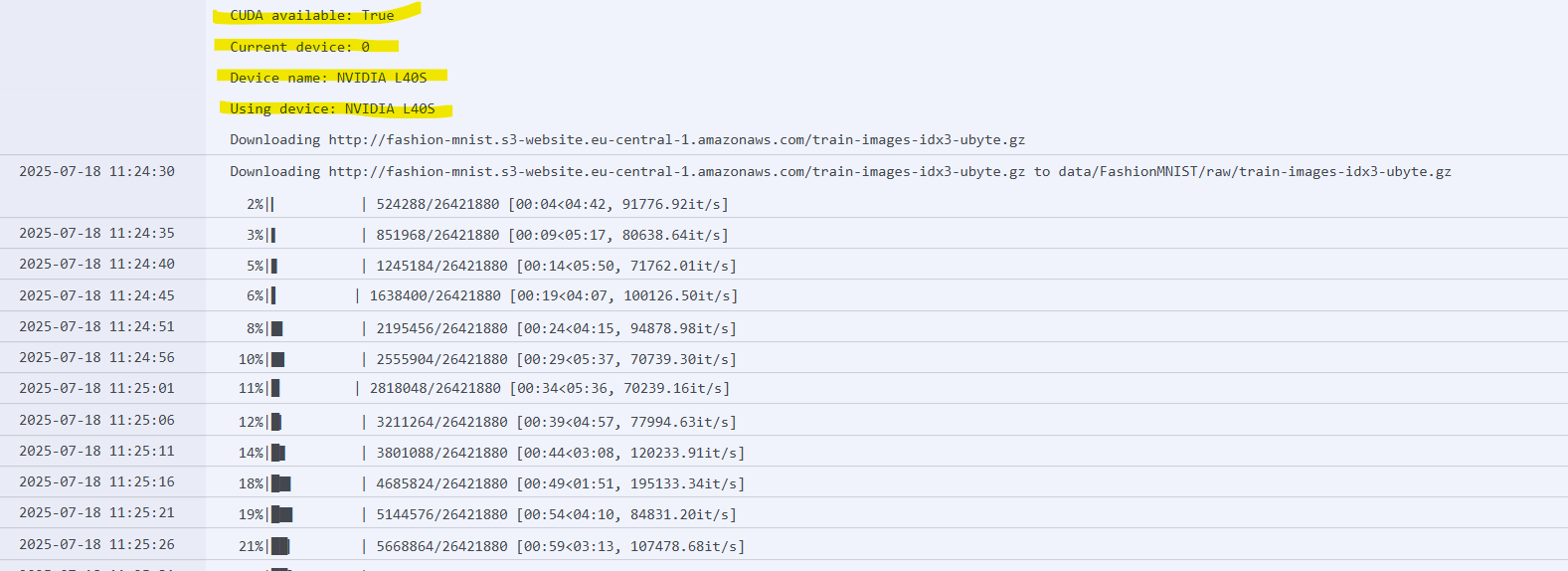

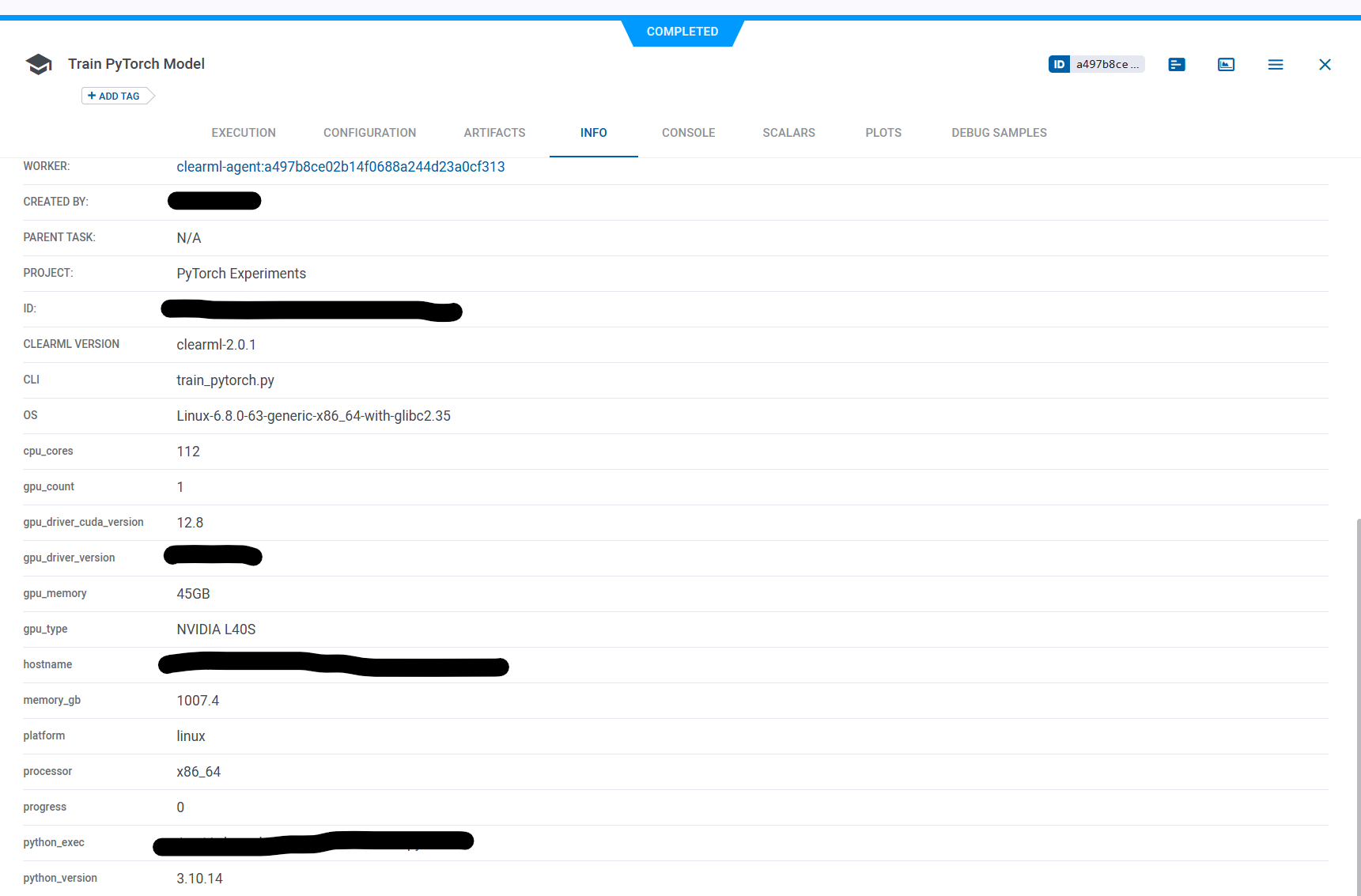

Here are some images for reference.

Hey @CostlyOstrich36, thanks for the suggestion!

Yes, I did manually run the same code on the worker node (e.g., using `python3 llm_deployment.py`), and it successfully utilized the GPU as expected.

When I run the workload directly on the worker node like that, everything works fine: the task picks up the GPU, logs stream back properly, and execution behaves normally.
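To compare what a process can actually see when run directly on the node versus inside a ClearML-launched pod, a small diagnostic along these lines could be run in both places. This is only a sketch: the function name `gpu_visibility_report` is hypothetical, and it assumes an NVIDIA setup where `nvidia-smi` is on the `PATH` when the GPU is exposed. It uses only the Python standard library and does not touch ClearML itself.

```python
import os
import shutil
import subprocess


def gpu_visibility_report(env=None):
    """Collect hints about whether this process can see an NVIDIA GPU.

    Hypothetical helper for debugging; not part of ClearML.
    """
    env = os.environ if env is None else env
    report = {
        # Environment variables commonly used to restrict GPU visibility.
        "CUDA_VISIBLE_DEVICES": env.get("CUDA_VISIBLE_DEVICES"),
        "NVIDIA_VISIBLE_DEVICES": env.get("NVIDIA_VISIBLE_DEVICES"),
        # None if nvidia-smi is not available in this environment.
        "nvidia_smi_path": shutil.which("nvidia-smi"),
        "gpus": [],
    }
    if report["nvidia_smi_path"]:
        try:
            out = subprocess.run(
                [report["nvidia_smi_path"],
                 "--query-gpu=name", "--format=csv,noheader"],
                capture_output=True, text=True, timeout=10, check=True,
            ).stdout
            report["gpus"] = [ln.strip() for ln in out.splitlines() if ln.strip()]
        except (subprocess.SubprocessError, OSError):
            # nvidia-smi exists but failed (e.g. no driver or device access).
            pass
    return report


if __name__ == "__main__":
    for key, value in gpu_visibility_report().items():
        print(f"{key}: {value}")
```

If the direct run lists a GPU while the same script submitted as a task shows an empty `gpus` list or a missing `nvidia-smi`, that would suggest the container or pod is not being given GPU access, rather than a problem in the training code.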

However, when I submit the same code using clearml-task f...