Unanswered

[ClearML Serving]

Hi Everyone!

I Am Trying To Automatically Generate An Online Endpoint For Inference When Manually Adding Tag

Well, after testing, I observed two things:

- When using automatic model deployment and training several models to which tag "released" was added, the

model_idin the "endpoints" section of the Serving Service persistently presents the ID of the initial model that was used to create the endpoint (and NOT the one of the latest trained model) (see first picture below ⤵ ). This is maybe the way it is implemented in ClearML, but a bit non-intuitive since, when using automatic model deployment, we would expect the endpoint to be pointing to the latest trained model with tag "released". Now, I suppose this endpoint is anyway pointing to the latest trained model with tag "released", even if themodel_iddisplayed is still the one of the initial model. Maybe ClearML Serving is implemented that way :man-shrugging: . - After using the command

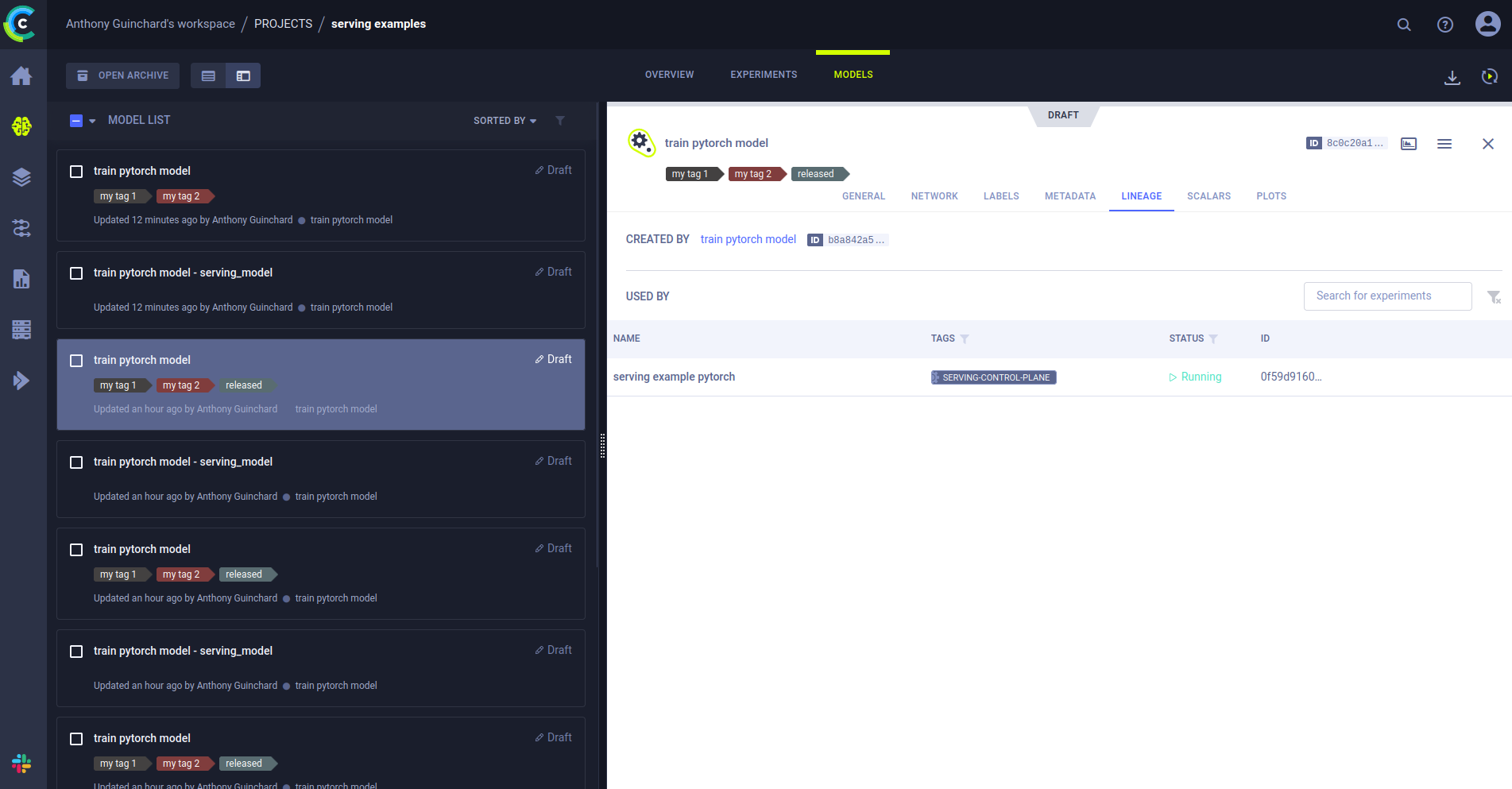

model auto-update, I trained several models and successively tagged them with "released". I noticed that, each time after training and tagging a new model, only latest trained model with tag "released" presents a link to the Serving Service under its "LINEAGE" tab. All other models that were previously trained and added tag "released" don't have this link under the "LINEAGE" tab, except the very initial model that was used to create the endpoint (see other pictures below ⤵ ). This makes me hope that the endpoint is effectively pointing towards the latest trained model with tag "released" 🙏 .
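One way to cross-check which model the endpoint is expected to serve is to look up the newest model carrying the tag and compare its ID with the `model_id` shown in the Serving Service. Below is a minimal sketch of that check, not a description of how ClearML Serving selects models internally. `ModelRecord` and `latest_with_tag` are hypothetical helpers written for illustration; `fetch_released_ids` assumes the ClearML SDK's `Model.query_models` call and a configured ClearML server, so its arguments and result ordering should be verified against the SDK documentation.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical stand-in for a model registry entry (not a ClearML class)."""
    model_id: str
    created: datetime
    tags: Tuple[str, ...]


def latest_with_tag(models: Iterable[ModelRecord], tag: str) -> Optional[ModelRecord]:
    """Return the most recently created model carrying `tag`, or None if there is none."""
    tagged = [m for m in models if tag in m.tags]
    return max(tagged, key=lambda m: m.created, default=None)


def fetch_released_ids(project_name: str, tag: str = "released") -> List[str]:
    """Assumed usage of the ClearML SDK: list IDs of models in a project with `tag`.

    Needs the `clearml` package and a reachable ClearML server; it is not
    called anywhere in this sketch.
    """
    from clearml import Model  # lazy import so the helpers above run without clearml

    return [m.id for m in Model.query_models(project_name=project_name, tags=[tag])]


if __name__ == "__main__":
    # Made-up records, only to show the selection logic.
    records = [
        ModelRecord("initial", datetime(2023, 1, 1), ("released",)),
        ModelRecord("retrained", datetime(2023, 2, 1), ("released",)),
        ModelRecord("untagged", datetime(2023, 3, 1), ()),
    ]
    newest = latest_with_tag(records, "released")
    print(newest.model_id if newest else "no tagged model")
```

If the ID found this way differs from the `model_id` displayed under "endpoints", that would match the first observation above: the displayed ID may simply not be refreshed, while the "LINEAGE" link hints at which model is actually being served.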


one year ago
