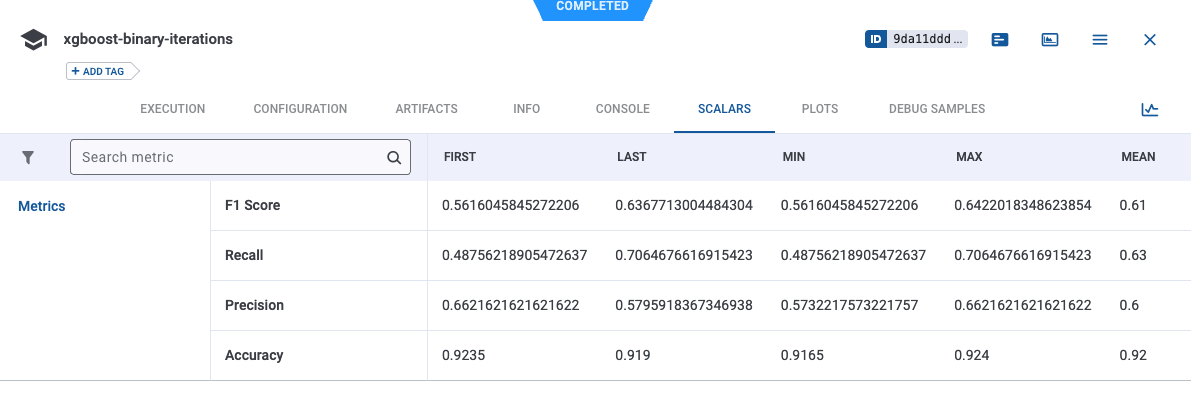

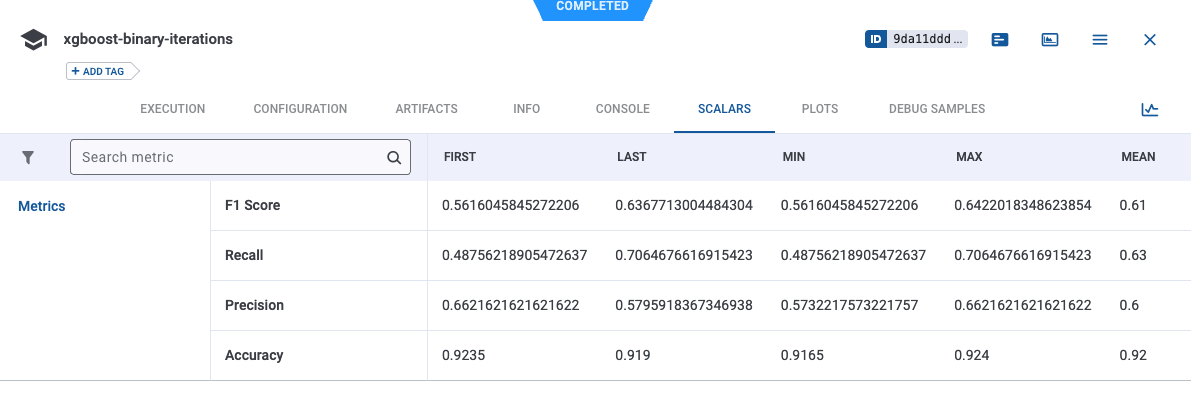

Hi again! I'm trying to figure out why my HPO doesn't seem to work for this simple XGBoost example I'm testing. I can see that the tasks are being cloned from the base model, but I don't see any output showing the evaluation metric (F1, in my case) for each cloned task, so I can't tell which task and which hyperparameter value (scale_pos_weight) performed best.

Here's the base model:

from clearml import Task, Model
from sklearn.datasets import make_classification
from xgboost import XGBClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, recall_score, f1_score, precision_score, roc_curve, auc
from utils.logging import run_and_log_eval_metrics, plot_and_log_auc
import matplotlib.pyplot as plt
import argparse

###### Argument Parser ########
ap = argparse.ArgumentParser()
ap.add_argument("-w0", "--weight_0", required=True, help="Y=0 Probability")
args = vars(ap.parse_args())

###### Enums ##################
RANDOM_STATE = 42
TEST_SIZE = 0.2
ITERATION = 0
SCALE_POS_WEIGHT = 1
CLASS_SEP = 0.7
NAME = 'xgboost-binary-train-base-model'
PROJECT_NAME = "XGBoost Experiments"
WEIGHT_0 = float(args["weight_0"])
WEIGHT_1 = 1 - WEIGHT_0
WEIGHTS = [WEIGHT_0, WEIGHT_1]

##############################
# PART 0: Initialize ClearML
task = Task.init(project_name=PROJECT_NAME,
                 task_name=NAME)
logger = task.get_logger()

# Connect hyperparameters
params = {
    'scale_pos_weight': SCALE_POS_WEIGHT
}
params = task.connect(params)

##############################
# PART 1: Generate a synthetic dataset with 2 classes
X, y = make_classification(n_samples=10000,
                           n_features=10,
                           n_informative=2,
                           n_redundant=2,
                           n_classes=2,
                           weights=WEIGHTS,
                           class_sep=CLASS_SEP,
                           random_state=RANDOM_STATE)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=TEST_SIZE, random_state=RANDOM_STATE)

##############################
# PART 2: Initialize XGBClassifier, Train/Fit Model
model = XGBClassifier(objective='binary:logistic',
                      random_state=RANDOM_STATE,
                      scale_pos_weight=params['scale_pos_weight'])

# Fit the model to training data
model.fit(X_train, y_train)

##############################
# PART 3: Save Model, Upload Artifacts + Evals to Server
# model.save_model(NAME + ".json")
# artifact = task.upload_artifact("model", NAME + ".json")

# Get predictions
preds = model.predict(X_test)

# Evaluation/Logging
run_and_log_eval_metrics(logger, y_test, preds, iteration=ITERATION)
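As a sanity check on the search range for scale_pos_weight, here is a minimal sketch of the commonly suggested starting value, the ratio of negative to positive samples. The helper name `suggested_scale_pos_weight` and the 0.9 example weight are illustrative assumptions, not part of the script above, and the real class ratio produced by `make_classification` will only be approximately equal to the requested weights.

```python
def suggested_scale_pos_weight(weight_0: float) -> float:
    """Ratio of the negative-class share to the positive-class share."""
    if not 0.0 < weight_0 < 1.0:
        raise ValueError("weight_0 must be strictly between 0 and 1")
    weight_1 = 1.0 - weight_0
    return weight_0 / weight_1


# Assumed example: about 90% of samples in class 0 (e.g. --weight_0 0.9).
print(round(suggested_scale_pos_weight(0.9), 2))
```

If the base task is run with a class-0 weight around 0.9, a value near the upper end of a 1 to 10 range would be a plausible place for the best F1 to show up, though that is only a heuristic.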

The utils logging helper it calls is defined in the code below:

from sklearn.metrics import accuracy_score, recall_score, f1_score, precision_score, roc_curve, auc
import matplotlib.pyplot as plt

def run_and_log_eval_metrics(logger, y_test, preds, iteration) -> None:
    accuracy = accuracy_score(y_test, preds)
    recall = recall_score(y_test, preds)
    f1 = f1_score(y_test, preds)
    precision = precision_score(y_test, preds)

    # Report each metric as a series under the "Metrics" scalar title
    logger.report_scalar(title="Metrics", series="Accuracy", iteration=iteration, value=accuracy)
    logger.report_scalar(title="Metrics", series="Precision", iteration=iteration, value=precision)
    logger.report_scalar(title="Metrics", series="Recall", iteration=iteration, value=recall)
    logger.report_scalar(title="Metrics", series="F1", iteration=iteration, value=f1)
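For reference, the F1 value reported under the "Metrics" title is the harmonic mean of precision and recall. Below is a minimal pure-Python sketch of that quantity for binary labels; the function name `f1_from_labels` and the toy labels are made up for illustration, and it is not meant to replace `f1_score`.

```python
def f1_from_labels(y_true, y_pred, positive=1):
    """Binary F1: harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No true positives: precision and recall are zero or undefined.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy labels (hypothetical): 2 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
print(f1_from_labels(y_true, y_pred))
```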

I'm trying to optimize for the highest F1 score with this HPO script:

from clearml import Task, InputModel
from sklearn.datasets import make_classification
from xgboost import XGBClassifier
from sklearn.model_selection import train_test_split
from utils.logging import run_and_log_eval_metrics, plot_and_log_auc
import argparse
from clearml.automation import UniformParameterRange, HyperParameterOptimizer

###### Argument Parser ########
ap = argparse.ArgumentParser()
ap.add_argument("-tid", "--taskid", required=True, help="Task ID")
args = vars(ap.parse_args())

###### Enums ##################
# RANDOM_STATE = 42
# TEST_SIZE = 0.2
# CLASS_SEP = 0.7
# WEIGHTS = [0.9, 0.1]
NAME = 'xgboost-binary-find-best-balance'
PROJECT_NAME = "XGBoost Experiments"

############################################
# PART 0: Initialize ClearML
task = Task.init(project_name=PROJECT_NAME,
                 task_name=NAME,
                 task_type=Task.TaskTypes.optimizer)

############################################
# PART 1: Run Hyperparameter Optimizer (HPO)
optimizer = HyperParameterOptimizer(
    base_task_id=args["taskid"],
    hyper_parameters=[
        UniformParameterRange('General/scale_pos_weight', min_value=1.0, max_value=10.0, step_size=1),
    ],
    objective_metric_title='Metrics',
    objective_metric_series='F1',
    # F1 is better when higher, so the objective should be maximized
    objective_metric_sign='max',
    max_iteration=10,
    execution_queue='default',
    optimize_task_parameters=True,
)

optimizer.start()
optimizer.set_time_limit(in_minutes=1.)

# wait until optimization completed or timed-out
optimizer.wait()

top_exp = optimizer.get_top_experiments(top_k=1)
print([t.id for t in top_exp])

# make sure we stop all jobs
optimizer.stop()
print('We are done, good bye')
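Since the goal is the highest F1, it may help to see how the objective sign changes which run counts as "best". The sketch below does not call ClearML at all. It enumerates the candidate values the range above describes (1.0 through 10.0 in steps of 1, assuming the maximum is included) and picks a winner from made-up F1 scores. The helpers `candidate_values` and `pick_best` and the scores themselves are hypothetical.

```python
def candidate_values(min_value, max_value, step_size):
    """Enumerate min_value, min_value + step, ... up to and including max_value."""
    steps = int(round((max_value - min_value) / step_size))
    return [min_value + i * step_size for i in range(steps + 1)]


def pick_best(scores, sign):
    """Return the parameter value whose score is best for the given sign."""
    if sign not in ("min", "max"):
        raise ValueError("sign must be 'min' or 'max'")
    chooser = max if sign == "max" else min
    return chooser(scores, key=scores.get)


values = candidate_values(1.0, 10.0, 1.0)

# Hypothetical F1 scores, one per candidate; real values come from the child tasks.
fake_f1 = {v: 0.50 + 0.04 * v - 0.004 * v * v for v in values}

print("best with 'max':", pick_best(fake_f1, "max"))
print("best with 'min':", pick_best(fake_f1, "min"))
```

With 'min' the run with the lowest F1 would be ranked first, so 'max' is the sign that matches the stated goal.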

Here's what I see in the web UI, but there's no indication of the F1 score for any of the cloned optimization tasks:

Are the cloned tasks running? Can you add logs from the HPO and one of the child tasks?