Reputation

Badges 1

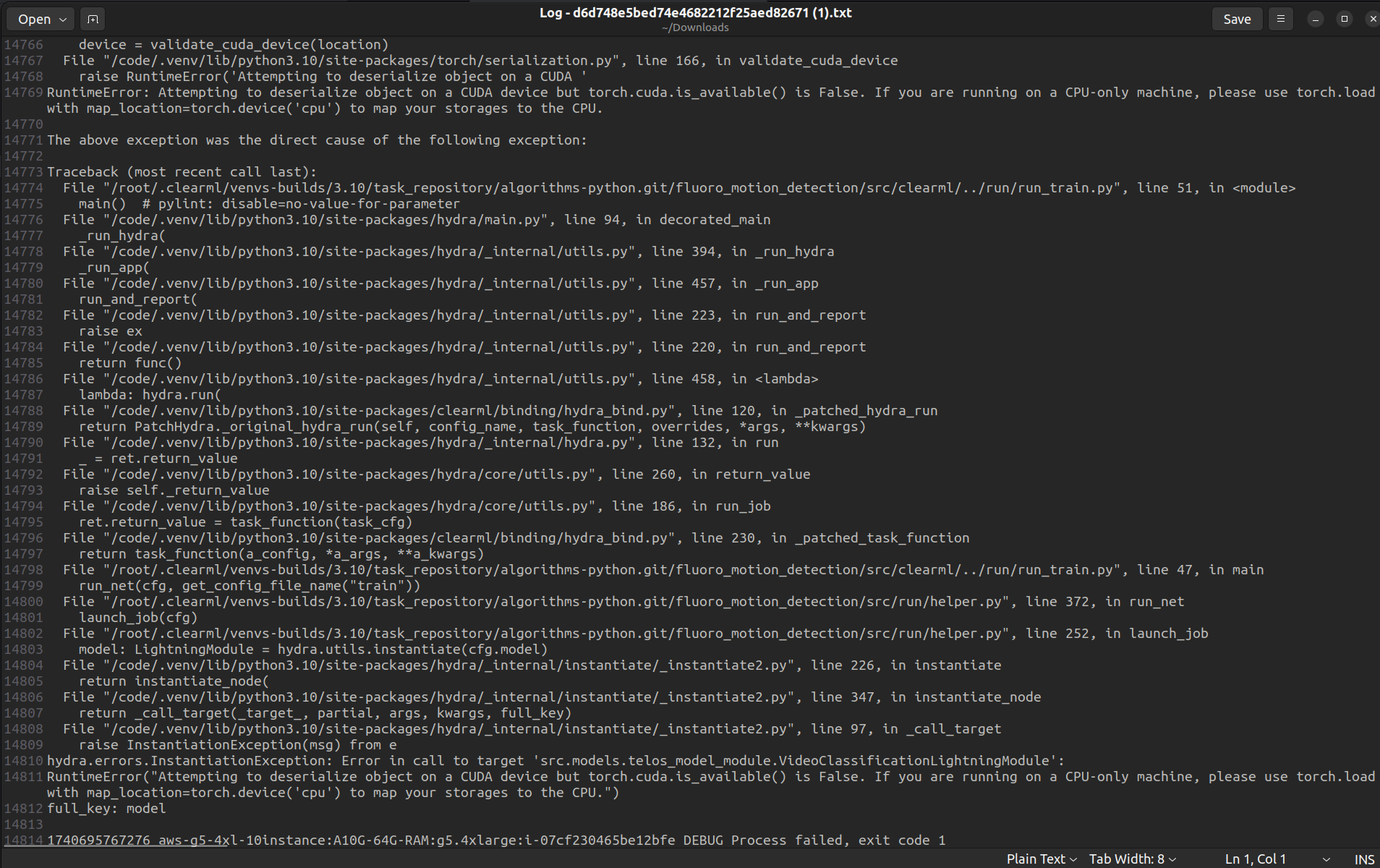

I got the same CUDA issue after being able to use the GPU

Actually never mind, it's working now!

And this issue happens randomly: I was able to run it again last night, but it failed again this morning

Hi @<1523701070390366208:profile|CostlyOstrich36> , here it is

@<1523701205467926528:profile|AgitatedDove14> Yes, I can see the worker:

@<1523701087100473344:profile|SuccessfulKoala55> Hi Jake, I am using 1.12.0

@<1523701087100473344:profile|SuccessfulKoala55> Hi Jake, I tried to use --output-uri in clearml-task but got the same error: clearml.storage - ERROR - Failed uploading: 'LazyEvalWrapper' object cannot be interpreted as an integer
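For context, that wording is what Python itself raises whenever an object without an `__index__` method is used where an integer is required. A minimal sketch, using a hypothetical `LazyValue` stand-in (an assumption for illustration, not ClearML's actual class), reproduces the message and shows that resolving the wrapper to a plain int first avoids it:

```python
class LazyValue:
    """Hypothetical stand-in for a lazily evaluated wrapper (not ClearML's class)."""

    def __init__(self, factory):
        self._factory = factory

    def resolve(self):
        # Evaluate the wrapped callable and return the real value.
        return self._factory()


def chunk_count(total):
    # range() needs a real integer (or an object that defines __index__).
    return len(range(total))


lazy_size = LazyValue(lambda: 4)

try:
    chunk_count(lazy_size)  # the wrapper is passed where an int is expected
except TypeError as exc:
    error_message = str(exc)

# Resolving the lazy value before use gives range() a plain int.
resolved_count = chunk_count(lazy_size.resolve())
```

This only illustrates the class of error; it does not show where the wrapper reaches the upload code in ClearML.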

I was trying to run python main.py experiment=example.yaml

Hi @<1523701087100473344:profile|SuccessfulKoala55> , what preconfiguration does the docker service need? I've tried running docker pull manually on AWS EC2 with the same docker image and did not hit the space limit issue.

#

from typing import List, Optional, Tuple

import pyrootutils

import lightning

import hydra

from clearml import Task

from omegaconf import DictConfig, OmegaConf

from lightning import LightningDataModule, LightningModule, Trainer, Callback

from lightning.pytorch.loggers import Logger

pyrootutils.setup_root(__file__, indicator="pyproject.toml", pythonpath=True)

# ------------------------------------------------------------------------------------ #

# the setup_root above is...

Hi @<1523701070390366208:profile|CostlyOstrich36> , any suggestion for this error?

Hi @<1523701070390366208:profile|CostlyOstrich36> Any idea why this happens?

It seems like the CPU is working on something. I saw the usage spiking periodically, but I didn't run any task this morning

screenshot of AWS Autoscaler setup, cpu mode is NOT enabled

@<1523701070390366208:profile|CostlyOstrich36> Don't the docker extra arguments only apply to the docker run command, not to dockerd?

I see, it seems like the --args for the script weren't passed to the docker:

--script fluoro_motion_detection/src/run/main.py \

--args experiment=example.yaml \

@<1523701070390366208:profile|CostlyOstrich36> yes, at the end of the new file

Hi @<1523701435869433856:profile|SmugDolphin23> I see, but is there any way to see the overridden config in OmegaConf so I can easily compare the difference between two experiments?
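One way to compare two runs offline, as a rough sketch only: flatten each resolved config into dotted keys and list the keys whose values differ. It assumes each config has already been converted to a plain dict (for example with `OmegaConf.to_container(cfg, resolve=True)`); the helper names and the sample values below are hypothetical.

```python
from typing import Any, Dict, Tuple

_MISSING = "<missing>"  # marker for keys present in only one config


def flatten(cfg: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Turn a nested dict into {"a.b.c": value} form."""
    flat: Dict[str, Any] = {}
    for key, value in cfg.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def diff_configs(a: Dict[str, Any], b: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return {dotted_key: (value_in_a, value_in_b)} for every differing key."""
    flat_a, flat_b = flatten(a), flatten(b)
    return {
        key: (flat_a.get(key, _MISSING), flat_b.get(key, _MISSING))
        for key in sorted(flat_a.keys() | flat_b.keys())
        if flat_a.get(key, _MISSING) != flat_b.get(key, _MISSING)
    }


# Hypothetical configs for two experiments.
run_a = {"trainer": {"accelerator": "cpu", "max_epochs": 10}, "seed": 42}
run_b = {"trainer": {"accelerator": "gpu", "max_epochs": 10}, "seed": 42}

changes = diff_configs(run_a, run_b)
for key, (old, new) in changes.items():
    print(f"{key}: {old} -> {new}")
```

Lists are compared as whole values here, which keeps the sketch short; a per-element diff would need extra handling.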

It was pending the whole day yesterday, but today it's able to run the task

Here it is @<1523701205467926528:profile|AgitatedDove14>

I did pass --args to the clearml-task command for this run, but it looks like the docker didn't pick it up

Okay, when I run main.py on my local machine, I can use python main.py experiment=example.yaml to override the accelerator to the GPU option. But it seems like the --args experiment=example.yaml in clearml-task didn't work, so do I have to modify it manually in the UI?

clearml-task \

--project fluoro-motion-detection \

--name uniformer-test \

--repo git@github.com:imperative-care-campbell/algorithms-python.git \

--branch SW-956-Fluoro-Motion-Detection \

--script fluoro_motio...

There is nothing on the queue and worker

Hi @<1808672071991955456:profile|CumbersomeCamel72> , the instance with the error was launched from the ClearML AWS Autoscaler on the webpage. The successfully mounted instance was launched manually from the AWS web console

@<1808672071991955456:profile|CumbersomeCamel72> It can be mounted without docker, but it can't be mounted if I run a docker container on the instance