Hi @<1523701070390366208:profile|CostlyOstrich36> Any idea why this happens?

One thing I've changed is the AMI for the autoscaler: I switched it from Amazon Linux to Ubuntu because my Docker file size exceeded the Amazon Linux limit. Not sure if this has anything to do with the issue.

Can you add the autoscaler configuration here?

@<1523701070390366208:profile|CostlyOstrich36> Yes, it's at the end of the new file.

@<1597762318140182528:profile|EnchantingPenguin77>, are you sure you added the correct log? I don't see any errors related to CUDA.

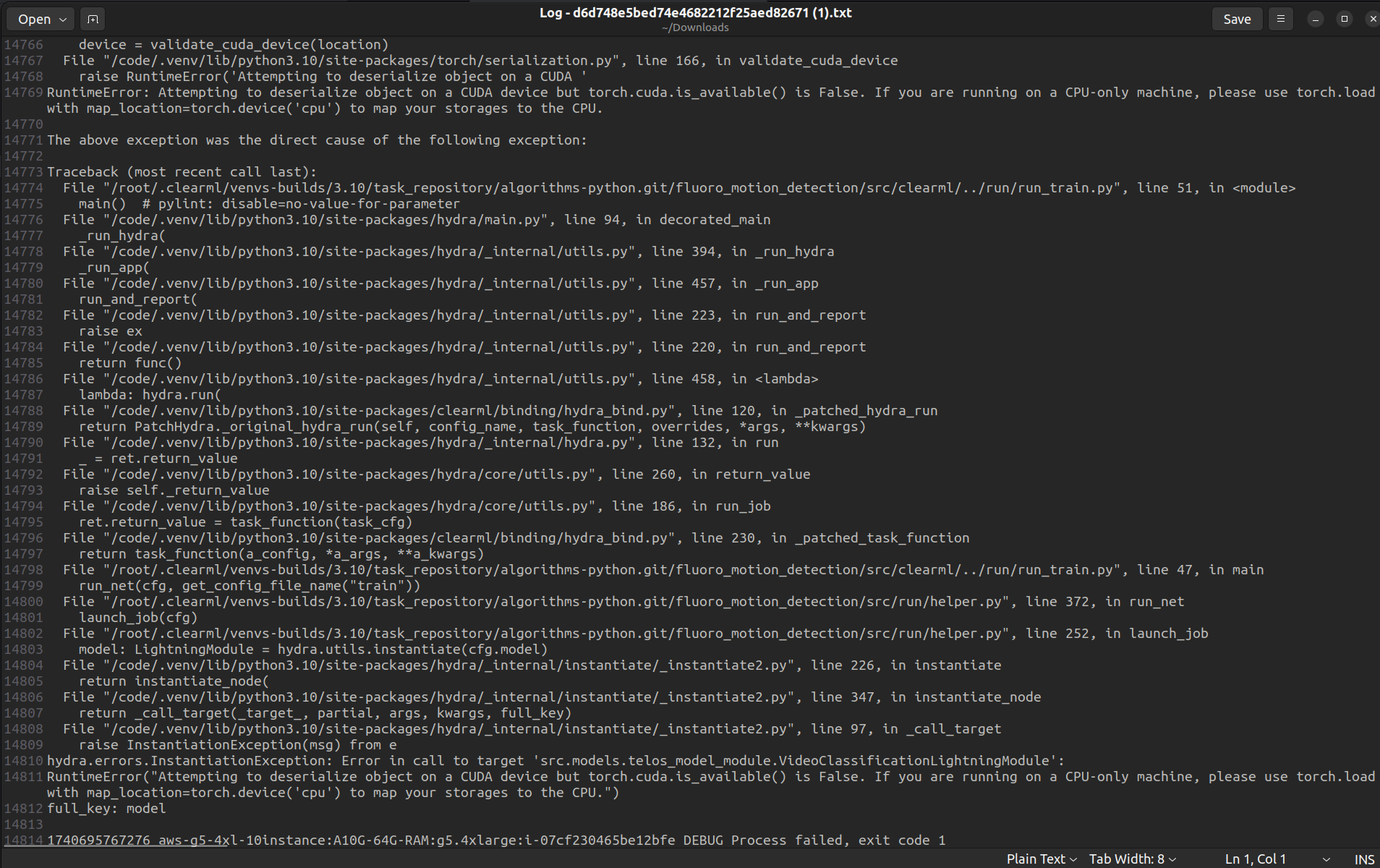

@<1523701070390366208:profile|CostlyOstrich36> Sorry, I uploaded the wrong log. Here is the error:

RuntimeError: Attempting to deserialize object on a CUDA device but torch.cuda.is_available() is False. If you are running on a CPU-only machine, please use torch.load with map_location=torch.device('cpu') to map your storages to the CPU.
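The error message itself names the workaround: pass `map_location` to `torch.load` so tensors saved on a GPU can be restored on whatever device is actually present. A minimal sketch, assuming the checkpoint is loaded with plain `torch.load`; `load_checkpoint` is a hypothetical helper name, not part of ClearML or PyTorch. Note that this only lets the task fall back to CPU; it does not explain why the GPU is not visible on some instances.

```python
import torch


def load_checkpoint(source):
    """Hypothetical helper: load a checkpoint onto the GPU if one is visible, else the CPU."""
    # Pick the device at load time instead of relying on the device recorded in the file.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    # map_location remaps every stored tensor onto `device`, avoiding the
    # "Attempting to deserialize object on a CUDA device" error on CPU-only hosts.
    return torch.load(source, map_location=device)
```

Usage would be `state = load_checkpoint("model.pt")` in place of `torch.load("model.pt")`, where `model.pt` is a placeholder path.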

Screenshot of the AWS Autoscaler setup: CPU mode is NOT enabled.

Hi @<1523701070390366208:profile|CostlyOstrich36>, here is the configuration. The GPU is sometimes detected when I clone a previous successful run, but whether it is found seems random. Also, I am unable to run multiple tasks at the same time, even when cloning the previous run.
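Since GPU detection is intermittent after the AMI change, one way to narrow it down is to log what the instance actually sees at the start of each task. A minimal sketch, assuming the Ubuntu AMI is expected to ship the NVIDIA driver with its `nvidia-smi` tool on `PATH`; `list_visible_gpus` is a hypothetical helper, not a ClearML API. An empty result on a failing run would point at the driver or AMI rather than at the training code.

```python
import shutil
import subprocess


def list_visible_gpus() -> list[str]:
    """Hypothetical helper: return the GPU lines from `nvidia-smi -L`, or [] if none are visible."""
    # Without nvidia-smi on PATH, the NVIDIA driver tools are not installed here.
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, timeout=30, check=True
        )
    except (OSError, subprocess.CalledProcessError, subprocess.TimeoutExpired):
        # nvidia-smi exists but failed to run, e.g. the driver is not loaded.
        return []
    # Each visible GPU is printed on its own line starting with "GPU <index>:".
    return [line for line in result.stdout.splitlines() if line.startswith("GPU ")]


if __name__ == "__main__":
    gpus = list_visible_gpus()
    print(f"Visible GPUs: {gpus or 'none'}")
```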

Hi @<1597762318140182528:profile|EnchantingPenguin77>, I don't see any errors related to CUDA in the log.

This issue also happens randomly: I was able to run it again last night, but it failed again this morning.