Our jobs are now running on the online app 👏

Thank you

Unfortunately, the issue is only partially resolved: while some jobs are running on one instance, on another instance (default_gpu), our jobs are still pending… 😢

@<1855782485208600576:profile|CourageousCoyote72> , @<1837300695921856512:profile|NastyBear13> , @<1855782492460552192:profile|IdealCamel90> we've just released v2.0.1, which should address this issue

Is there a way to override the version of clearml-agent that gets installed on the worker?

Hi everyone, it seems the

clearml_agent: ERROR: Could not install task requirements!

expected SCALAR, SEQUENCE-START, MAPPING-START, or ALIAS

issue is related to a verbose-printing failure which we can't reproduce, but we have a pretty good idea where it happens. We'll release an RC of the agent soon to address this issue, and we'd appreciate it if anyone who can reproduce the issue could try it out and let us know whether a formal version should be released

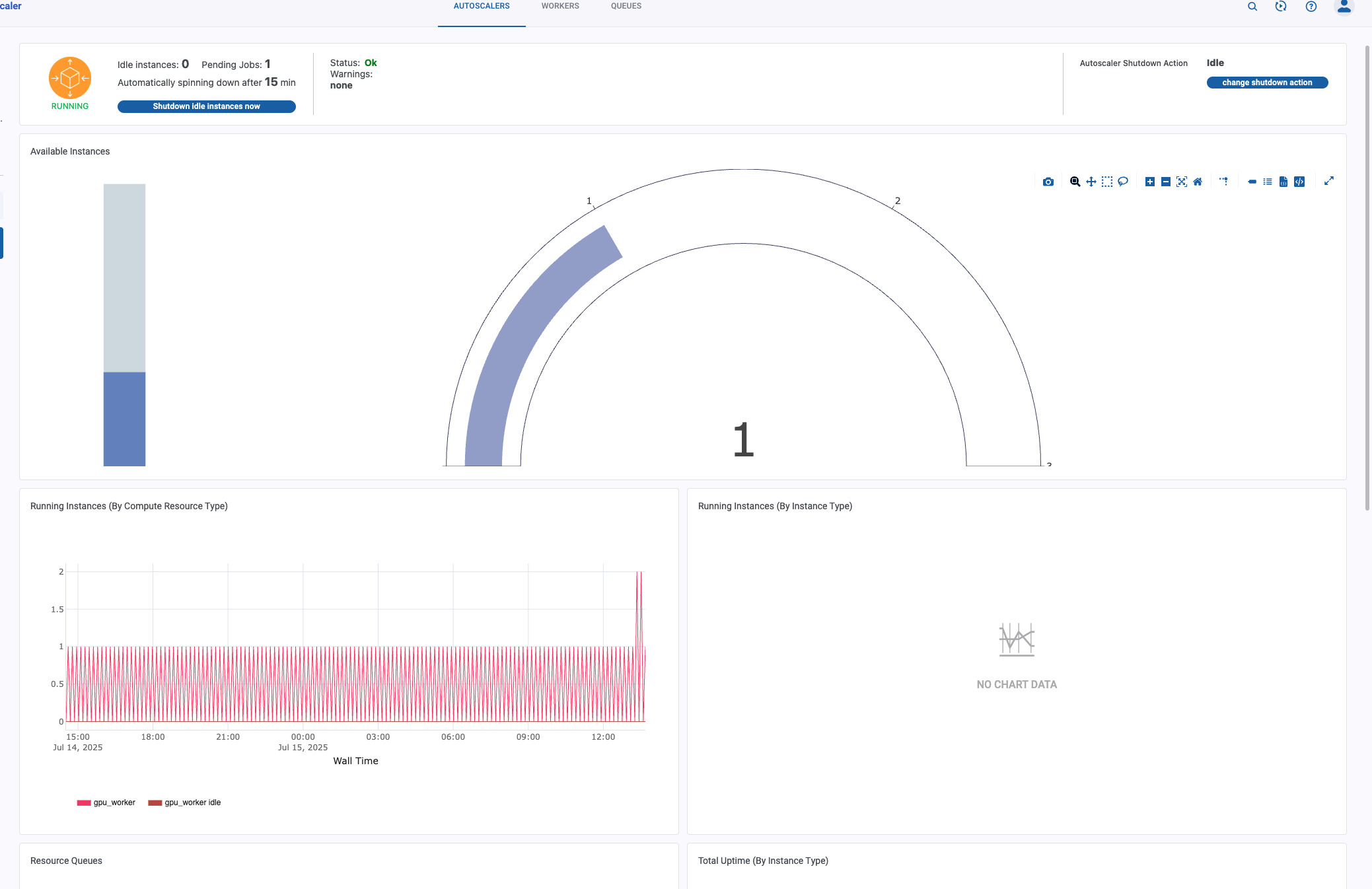

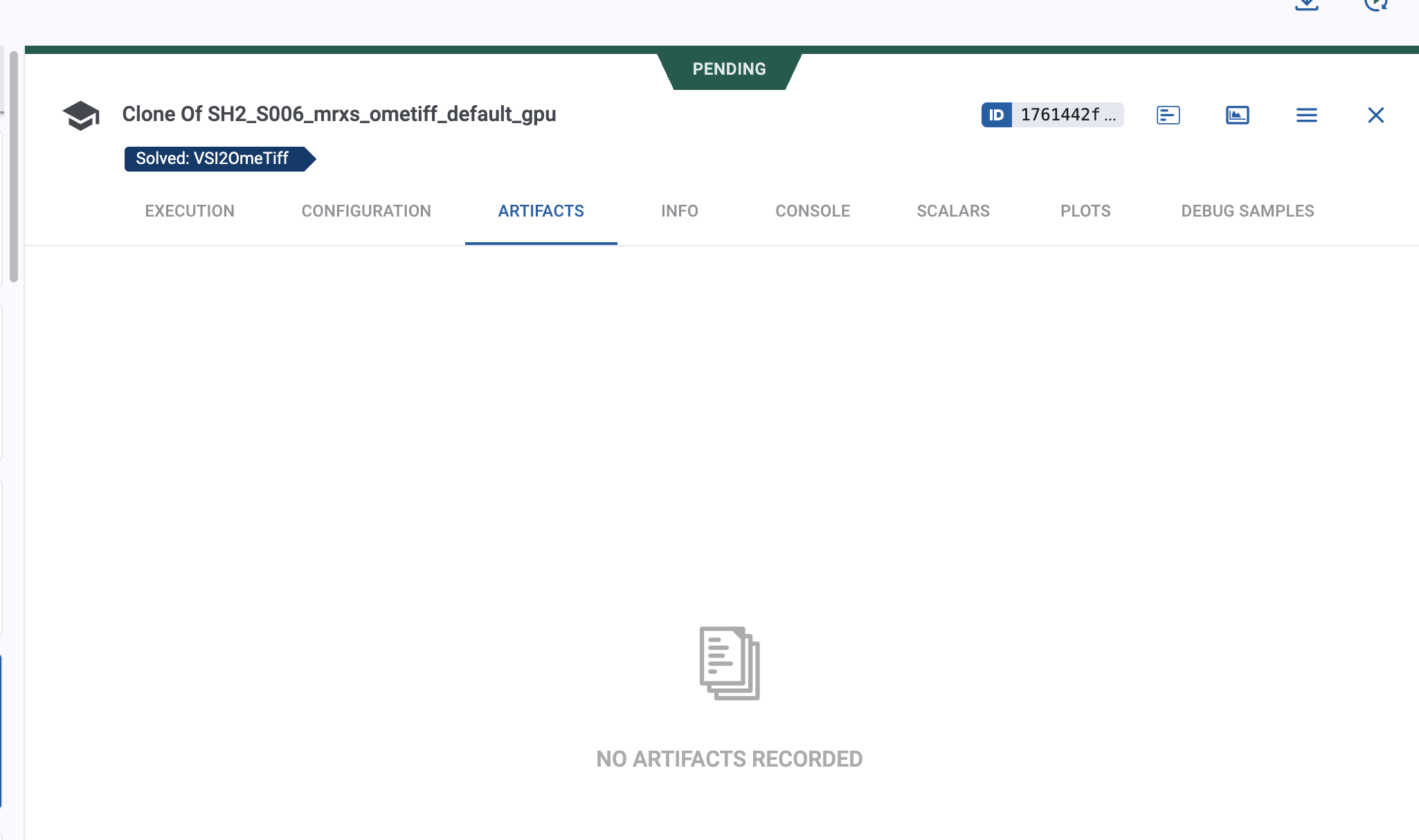

I do not see any artifacts linked to the jobs in the default_gpu queue. We have not changed the configuration; as a debugging step, we simply restarted the instance.

It now actually runs jobs but

@<1523701087100473344:profile|SuccessfulKoala55> I get:

clearml_agent: ERROR: Could not install task requirements!

expected SCALAR, SEQUENCE-START, MAPPING-START, or ALIAS

Which I didn't get previously

The previous version was 1.9.3. Can we still run that clearml-agent version through the autoscaler?
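On pinning the agent version: a minimal sketch, assuming the worker's startup step can run Python and that pip is available there. Whether the autoscaler keeps this pin or reinstalls its own agent version is not confirmed in this thread, so treat that as an assumption to verify. `pin_command` and `installed_version` are hypothetical helper names.

```python
import sys
from importlib import metadata
from typing import List, Optional


def pin_command(package: str, version: str) -> List[str]:
    """Build a pip command that installs exactly one version of a package."""
    return [sys.executable, "-m", "pip", "install", f"{package}=={version}"]


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None
```

Passing the result of `pin_command("clearml-agent", "1.9.3")` to `subprocess.run(..., check=True)` would perform the install, and `installed_version("clearml-agent")` can then confirm which version is actually present on the worker.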

Hi all, ClearML Agent v2.0.2rc0 is out. If you can try it out and let us know whether the issue is resolved, we'd appreciate it 🙏

Hello

Sorry for my late reply.

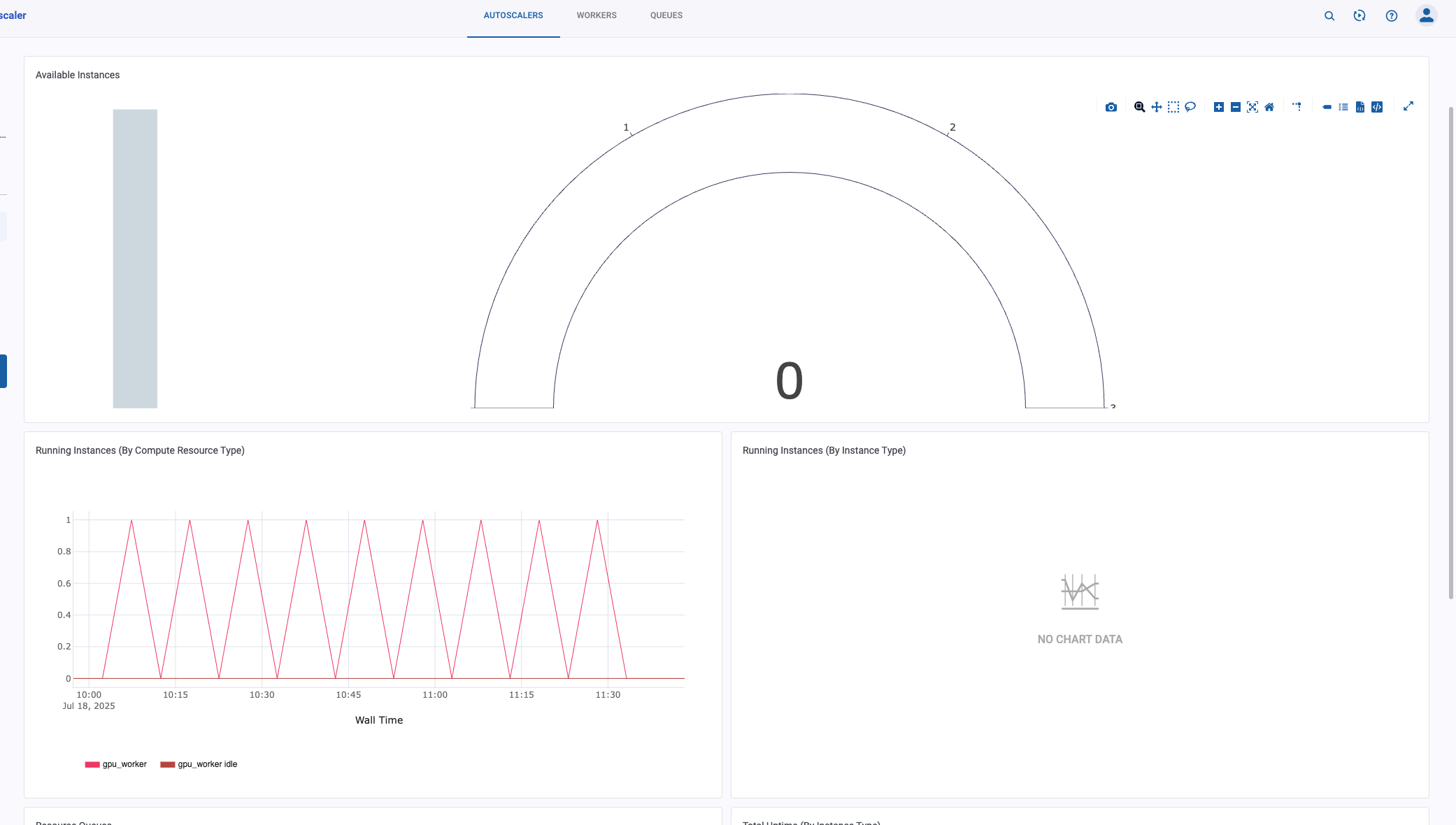

I’m running into an issue with my default_gpu queue: the ClearML auto-scaler detects the job and puts it into the “Pending” state, but it never actually runs. From the auto-scaler logs (see screenshot 1), this seems expected since it only checks the queue every 5 minutes. I’ve also attached the relevant log file.

However, I don’t see anything in the logs that clearly explains the problem. Looking at AWS, I can see that the instance starts, stays in “Initializing” for a while, and then terminates.

Sorry if this isn’t very clear, but I hope this info is still helpful!

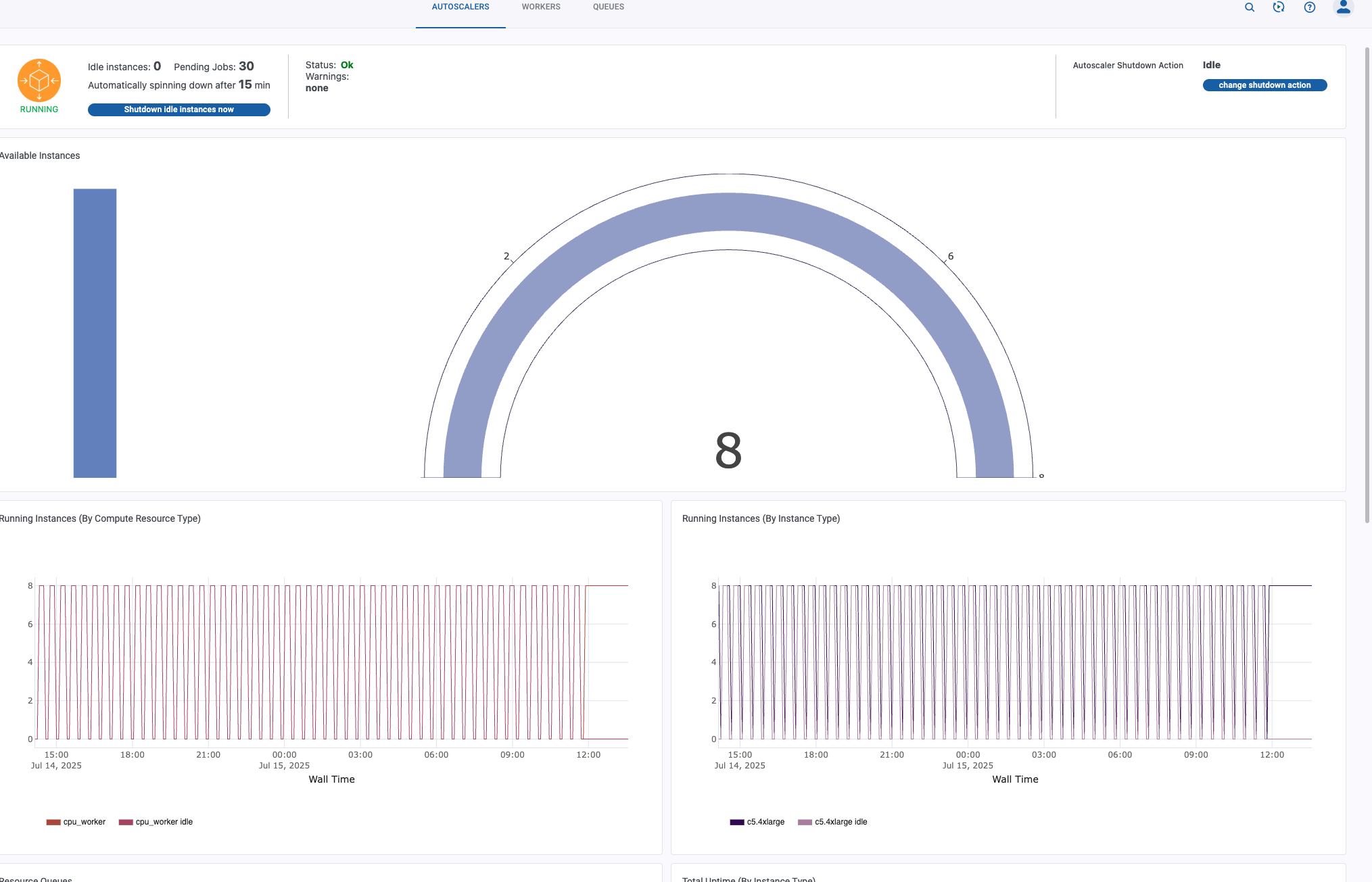

Hi all, we're looking into it right now

It looks like the task.execute_remotely() method is somehow broken. Previously, when I used it, the task would run in the queue with the same parameters I set locally. But now the parameters are not being passed correctly, and I end up with two tasks: one that I launched locally (but it ends up running remotely), and another one — but without any parameters at all.
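For anyone trying to reproduce this, here is a minimal sketch of the usual pattern, assuming the `clearml` package is installed and configured. The project name, task name, and parameter values are hypothetical; `default_gpu` is the queue mentioned in this thread. With `clone=False` the local task itself is enqueued, while `clone=True` enqueues a copy and so leaves two tasks.

```python
from typing import Any, Dict

# Hypothetical hyperparameters, for illustration only.
DEFAULT_PARAMS: Dict[str, Any] = {"learning_rate": 0.001, "epochs": 10}


def launch_remote(queue_name: str = "default_gpu") -> None:
    """Register parameters locally, then hand the task over to a queue."""
    # Imported here so the sketch can be loaded without clearml installed.
    from clearml import Task

    # Hypothetical project and task names.
    task = Task.init(project_name="examples", task_name="remote-params-check")

    # Connect the parameters before going remote so they are stored on the task.
    params = task.connect(dict(DEFAULT_PARAMS))

    # clone=False enqueues this task itself; the local process exits at this call.
    task.execute_remotely(queue_name=queue_name, clone=False, exit_process=True)

    # Reached only when an agent re-runs the script remotely.
    print("running with", params)
```

If the remote run prints the same values that were connected locally, parameter passing works as expected; an empty or default-only task on the server would match the behaviour described above.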

It's strange — how could the agent update have affected this?

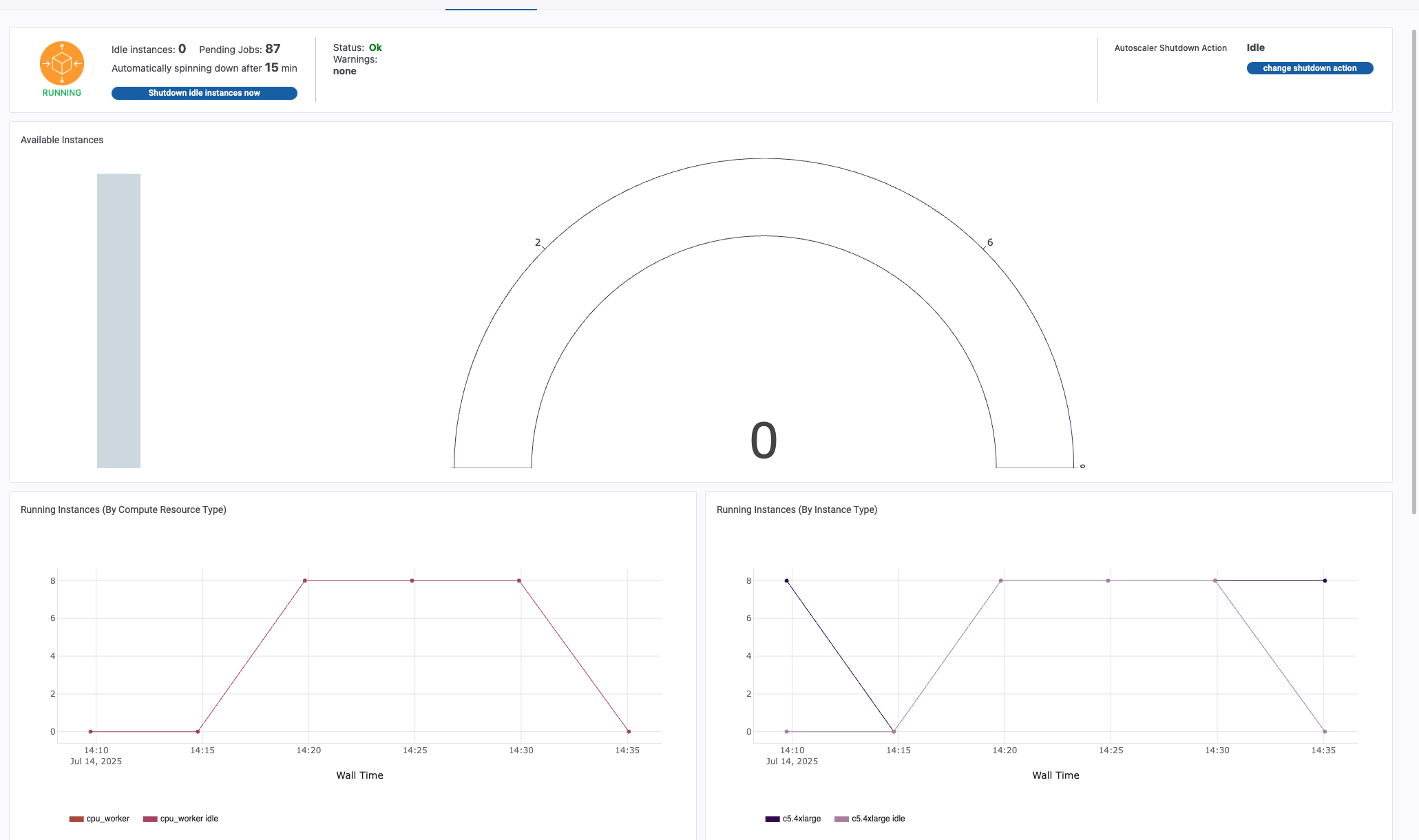

@<1855782485208600576:profile|CourageousCoyote72> , do you see crashes or machines going up or down? Or are machines from EC2 just not being allocated?

The same happened to us today.

Usually everything works great, but now the autoscaler just starts instances and runs nothing on them.

Thank you for the detailed explanation. Can you please add a log of the EC2 instance itself? You can find it in the artifacts section of the autoscaler task. Is it the same autoscaler setup that used to work without issue, or were any changes introduced into the configuration?

Another problem surfaced: the same tasks that previously ran normally are now failing with this error log:

"""

clearml_agent: ERROR: Could not install task requirements!

expected SCALAR, SEQUENCE-START, MAPPING-START, or ALIAS

"""

Hi,

It looks like the same issue is happening. It seems to be caused by the recent update of the clearml-agent package to version 2.0.0.

When I start the queue locally, the agent appears in the list but doesn't pick up any tasks. On the agent side, I get the following error:

    FATAL ERROR:
    Traceback (most recent call last):
      File "***.venv/lib/python3.12/site-packages/clearml_agent/commands/worker.py", line 2128, in daemon
        self.run_tasks_loop(
      File "***.venv/lib/python3.12/site-packages/clearml_agent/commands/worker.py", line 1464, in run_tasks_loop
        self.monitor.setup_daemon_cluster_report(worker_id=self.worker_id, max_workers=1)
      File "***.venv/lib/python3.12/site-packages/clearml_agent/helper/resource_monitor.py", line 283, in setup_daemon_cluster_report
        self._cluster_report.pending = True
        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    AttributeError: 'NoneType' object has no attribute 'pending'
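The last frame suggests an attribute is being set on an object that was never created. Below is a minimal sketch of that failure pattern and a simple guard. This is not the clearml-agent code: `ClusterReport` and `Monitor` are hypothetical stand-ins, and why the real `_cluster_report` is `None` is not established in this thread.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClusterReport:
    """Hypothetical stand-in for the agent's internal report object."""
    pending: bool = False


class Monitor:
    """Hypothetical monitor whose report exists only when reporting is enabled."""

    def __init__(self, reporting_enabled: bool) -> None:
        self._cluster_report: Optional[ClusterReport] = (
            ClusterReport() if reporting_enabled else None
        )

    def setup_unguarded(self) -> None:
        # Same shape as the failing line: raises AttributeError when the report is None.
        self._cluster_report.pending = True

    def setup_guarded(self) -> bool:
        # Skip the update when the report was never initialized.
        if self._cluster_report is None:
            return False
        self._cluster_report.pending = True
        return True
```

Calling `Monitor(reporting_enabled=False).setup_unguarded()` raises the same kind of `AttributeError` as in the traceback, while `setup_guarded()` returns `False` instead of crashing the daemon loop.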