@<1855782479961526272:profile|CleanBee5>, I'm guessing that the file server (debug samples are saved there by default) is under load because of the number of debug samples.

Regarding the size, the ClearML SDK only logs/uploads whatever you create, so if you want less load, you can also use smaller/lighter images.

We are not using the file server to store the images.

We are using S3; the image loading itself is fast, around 100 ms.

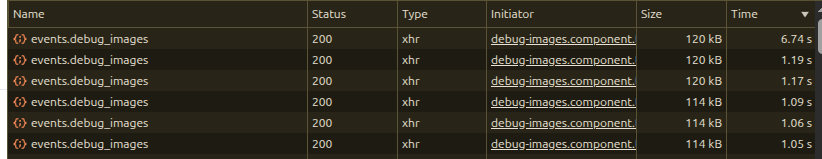

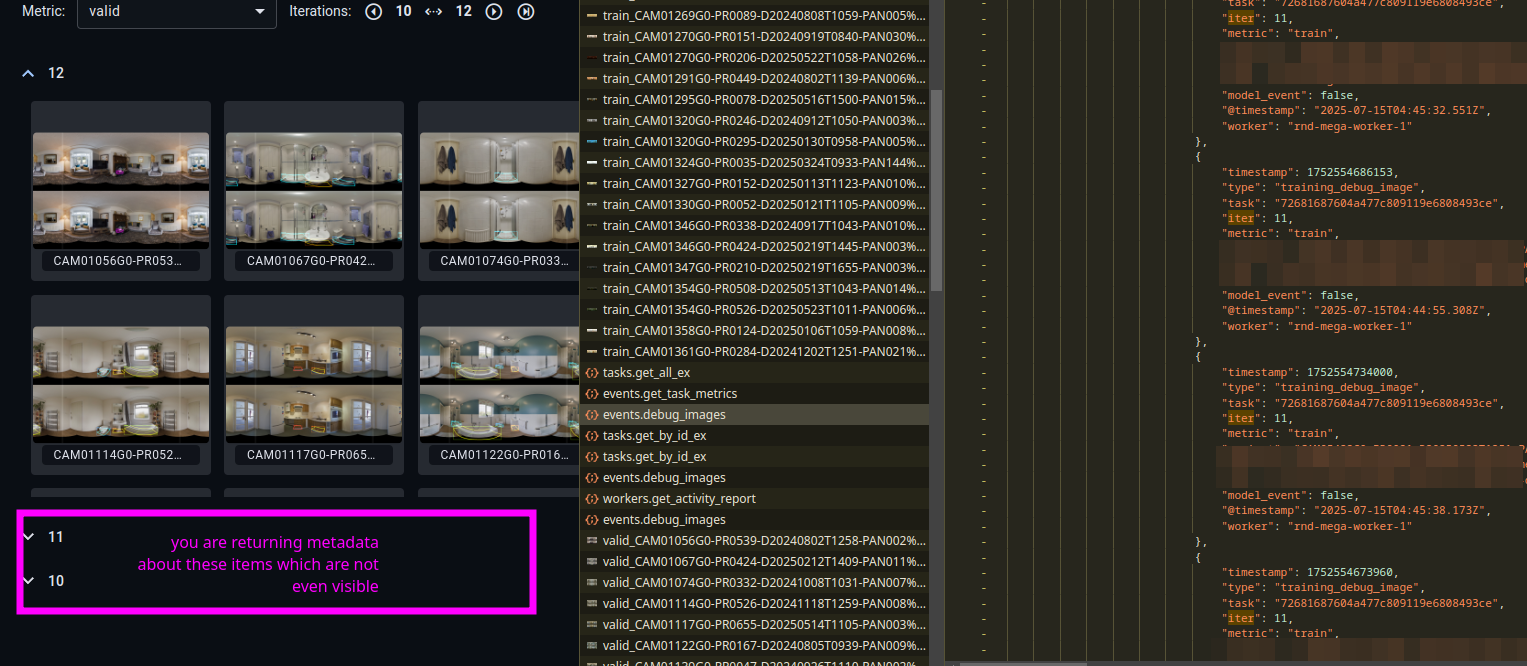

What is slow is the request to the server, which builds a gigantic JSON response that is sent back.

Saying to use lower resolution and fewer images is like saying "then don't use ClearML to look at images".

@<1855782479961526272:profile|CleanBee5>, in that case you should beef up the resources of the machine running the apiserver.

At the end of the day, this 2-3 MB payload basically contains all the events (including debug sample metadata + links) of thousands of iterations. You could modify the frontend (FE) code to load a smaller range of iterations or to strip some of the metadata (though the metadata should already be minimal, such as the iteration and the metric name).
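To get a feel for why that response grows so large, here is a rough back-of-the-envelope sketch. It builds hypothetical event records (the field names and the S3 URL layout are made up for illustration and are not ClearML's actual event schema) for 2000 iterations × 10 debug samples, serializes them as a single JSON body, and compares that with a window covering only the last 100 iterations.

```python
import json


def fake_debug_sample_events(num_iterations, samples_per_iteration):
    """Build hypothetical debug-sample event records.

    The field names are illustrative only; they are NOT the real ClearML schema.
    """
    events = []
    for it in range(num_iterations):
        for s in range(samples_per_iteration):
            events.append({
                "iter": it,
                "metric": "val_images",
                "variant": f"sample_{s}",
                # Hypothetical bucket/path layout, one image link per event
                "url": f"s3://my-bucket/debug/val_images/sample_{s}/{it:06d}.png",
            })
    return events


def payload_bytes(events):
    """Size of the events serialized as one JSON response body, in bytes."""
    return len(json.dumps(events).encode("utf-8"))


events = fake_debug_sample_events(num_iterations=2000, samples_per_iteration=10)
# Loading a smaller iteration range: keep only the last 100 iterations
window = [e for e in events if e["iter"] >= 1900]

print(f"all iterations:  {payload_bytes(events) / 1e6:.2f} MB")
print(f"last 100 only:   {payload_bytes(window) / 1e6:.2f} MB")
```

Even with only four short fields per event, the size scales linearly with iterations × samples, which is why narrowing the iteration range helps more than trimming individual fields.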

Additionally, I believe the enterprise version supports rolling indexes.

Not a solution, but just curious: why would you need that many "debug" images?

Those are images generated by your training code that ClearML uploads automatically. Maybe disable the automatic image upload in `Task.init`?
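A minimal sketch of what that could look like, using the `auto_connect_frameworks` argument of `Task.init`. It assumes the images are captured through the TensorBoard and/or matplotlib bindings; which binding actually produces them depends on the training code. Note that `"tensorboard": False` turns off all TensorBoard capture, scalars included. The helper name `init_task` is hypothetical.

```python
# Assumption: the debug images come from TensorBoard image summaries and/or
# matplotlib figures. "tensorboard": False also stops TensorBoard scalar capture.
AUTO_CONNECT = {"tensorboard": False, "matplotlib": False}


def init_task(project_name: str, task_name: str):
    """Hypothetical helper: create a ClearML task without auto-capturing images."""
    # Imported lazily: requires the clearml package and a configured server
    from clearml import Task

    return Task.init(
        project_name=project_name,
        task_name=task_name,
        auto_connect_frameworks=AUTO_CONNECT,
    )
```

With the automatic capture off, the images you still care about can be reported explicitly, e.g. via `task.get_logger().report_image(...)`, so you control how many end up in Debug Samples.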

> then don't use ClearML to look at images

I don't think ClearML is designed to visualize millions of images per task, at least not in the Debug Samples section. That section was designed so that you can see, for a given set of images, how the model performs epoch after epoch.
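The usage pattern described above can be sketched as: pick a fixed set of images once, then report that same set periodically. The dataset size, `k=16` and the every-5-epochs interval are arbitrary assumptions, and the actual reporting call (e.g. ClearML's `Logger.report_image`) is only referenced in a comment.

```python
import random


def pick_fixed_samples(dataset_size, k, seed=0):
    """Choose the same k sample indices once, so every epoch shows the same images."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(dataset_size), k))


def should_report(epoch, every_n_epochs):
    """Report debug images only every `every_n_epochs` epochs (epoch 0 included)."""
    return epoch % every_n_epochs == 0


dataset_size, num_epochs = 100_000, 50
samples = pick_fixed_samples(dataset_size, k=16, seed=42)
reported_epochs = [e for e in range(num_epochs) if should_report(e, every_n_epochs=5)]

# Inside the training loop one would call, for each index in `samples`, e.g.
# logger.report_image(title=..., series=..., iteration=epoch, image=...)
total_images = len(samples) * len(reported_epochs)
print(total_images)  # 160
```

This keeps the Debug Samples view small (16 series, 10 points each) while still showing how predictions on the same images evolve.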

To visualize millions of images, there are tools like FiftyOne.